Analysis

Meaningless words: Dangerous conversations with ChatGPT

ChatGPT, OpenAI's eloquent chatbot, has grabbed the internet's attention with the confident dialogue it simulates. But the sophistication of this generative technology obscures the fact that the bot has no understanding of the world and that the texts it produces carry no meaning.

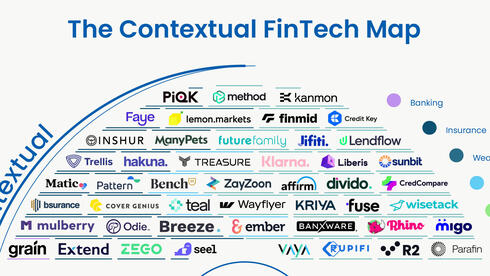

1. Looking at the last few years, venture capital investors' legs must be tired: they always seem to be standing in line. In line to invest in the new crypto venture, the next autonomous-driving star, the new Metaverse project, augmented reality, the "Uber for animals", smart cities, cleantech, fintech, genetically engineered food or (fill in the blank) whatever might be the "next big thing". The current buzzword and Silicon Valley craze is generative artificial intelligence. The giant VC fund Sequoia compared these models to smartphones, predicting that they too will unleash a burst of new applications on us. "The race is on," it announced without embarrassment.

The company attracting the most interest is OpenAI, which launched a text generator called ChatGPT that has already signed up over one million users. The astonishment seems almost unanimous: screenshots of conversations with the chatbot flooded social networks, and Silicon Valley executives voiced their enthusiasm. Paul Graham, co-founder of Y Combinator, the best-known startup accelerator, tweeted that "obviously something big is happening"; Elon Musk tweeted that "ChatGPT is scary good. We are not far from dangerously strong AI"; and Aaron Levie, co-founder of the cloud company Box, said that "ChatGPT is one of those rare moments in technology where you see a glimmer of how everything is going to be different going forward."

What is generative artificial intelligence? What can this chatbot do that others can't, and what can't it do? What expectations does it inspire, and what power does it hold that receives no attention? Here is a guide for the perplexed to the chatbot that broke the internet and then rewrote it. It sounds roughly reasonable, and some believe it will change the future. Sure, maybe.

2. Generative artificial intelligence (generative AI) refers to algorithms that can produce new content (text, images or audio) with relatively little human intervention. These applications are not entirely new, but the past year was a milestone for the field, because it was the first in which high-quality applications were released to the general public to try for free (or at very low cost). Among them: the DALL-E 2 image generator (also from OpenAI, the creator of ChatGPT), Google's Imagen image generator and LaMDA language model, Stable Diffusion and Midjourney.

The OpenAI chatbot is designed to interact in the form of a conversation. "The dialogue format makes it possible for ChatGPT to answer followup questions, admit its mistakes, challenge incorrect premises, and reject inappropriate requests," reads the website of OpenAI, a for-profit organization. Bots are not a new phenomenon, but ChatGPT stands out because its answers leave a strong impression of being relevant, sound, reasoned and well articulated. The model even "remembers" the course of the conversation, which creates the feeling of a more natural exchange (incidentally, only in English). "I have no physical form, and I only exist as a computer program," the bot replied when I asked about its nature, adding, "I am not a person and I do not have the ability to think or act on my own." This sleek question-and-answer mechanism has led some users to declare that "Google is dead" and that the chatbot signals a tectonic shift in the job market. Others suggested that the bot, or at least the ability to harness its skills, could fundamentally change a range of professions: law, customer service, programming, research in general and academia in particular, financial services, every kind of content writing (marketing, literature, journalism), and even teaching.

To explore the practical possibilities of these models, you have to start with the basics: how do ChatGPT and similar chatbots work? As the name suggests, ChatGPT runs on a language model from the GPT-3 family. Such a model is, in short, a statistical tool for predicting words in a sequence: after training on large datasets, it makes a sophisticated guess about which word is likely to follow which word (and which sentence is likely to follow which sentence). GPT-3 is a large language model with 10 times more parameters (the values the network tries to optimize during training) than any previous non-sparse language model. It was built on 45 terabytes of data, from sources as diverse as Wikipedia, two book corpora, and raw web crawls. The model also undergoes a process known as "fine-tuning", in which it is trained on a set of instructions paired with the expected responses. The company's website explains a little about how the chatbot was trained: human-written answers were fed into GPT as training data, and then "reinforcement learning" (a reward-and-punishment method) was used to get the model to give better answers. That is more or less what we know about the model. When I thought I might learn more by asking ChatGPT itself, I got a generic answer (repeatedly) that it could not answer because this information is "property of OpenAI".

Even without knowing all the details, it is clear that the data the model was built on contains more information than any one person could encounter in a lifetime. This intensive training allows the model, given an input text, to estimate probabilistically which word from its vocabulary should come next. That is not simply the word that is statistically most likely, but the word that is likely given other factors as well, such as writing style or tone.
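To make "predicting which word comes after which word" concrete, here is a deliberately tiny sketch in Python. It is a toy built on assumptions, not how GPT-3 works internally: GPT-3 is a neural network over sub-word tokens, while this code simply counts whole-word pairs (a "bigram" model) in a one-sentence corpus. The function names are hypothetical. What it shares with the real thing is the point made above: the model holds counts and probabilities, not meanings.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def next_word_probabilities(follows: dict[str, Counter], word: str) -> dict[str, float]:
    """Turn raw follow-counts into a probability distribution over next words."""
    counts = follows.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


def most_likely_next(follows: dict[str, Counter], word: str) -> str | None:
    """Pick the single most probable next word (None if the word was never followed)."""
    probs = next_word_probabilities(follows, word)
    return max(probs, key=probs.get) if probs else None


corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(next_word_probabilities(model, "the"))  # {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
print(most_likely_next(model, "the"))         # cat
```

On this corpus "cat" follows "the" in two of four cases, so it comes out as the most likely next word. Nothing in the code knows what a cat is.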

Explanations of how these models work rely on words like "statistics" and "probability", not "language" or "meaning". Even though the model knows how to build convincing combinations, what it produces is not grounded in the world and does not intentionally communicate any idea about it; its output carries no intention, because the chatbot is not trying to communicate anything. The combinations the model assembles in a calculated (and impressive) way become meaningful only when someone attributes meaning to them, that is, only when a person on the other side reads them. This is completely different from a person who says or writes something in order to convey a deliberate idea. This is a key point for understanding what ChatGPT and similar tools can and cannot do. These models can realistically imitate natural language and can be remarkably accurate at predicting the words or phrases likely to come next in a sentence or conversation. But that is all they do: they do not understand what they are saying, and despite the misleading label "artificial intelligence", they have no mind or intelligence.

Researchers Emily Bender, Timnit Gebru and their co-authors suggest describing these models as "stochastic parrots". Parrot, because the model only repeats what it was fed without any understanding of meaning; stochastic, because the process is probabilistic, driven by chance. "Text generated by an LM is not grounded in communicative intent, any model of the world, or any model of the reader's state of mind," they wrote in a 2021 paper, describing such a model as a system for "haphazardly stitching together sequences of linguistic forms it has observed in its vast training data, according to probabilistic information about how they combine, but without any reference to meaning: a stochastic parrot."
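The "stochastic" half of the metaphor can be sketched the same way. The toy below (hypothetical names again, with a word-level bigram model standing in for a neural network) generates text by repeatedly drawing the next word at random, weighted by how often that word followed the previous one in the training text. Real chatbots sample sub-word tokens from a far richer learned distribution, but a weighted random draw is roughly the same basic move.

```python
from __future__ import annotations

import random
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def parrot(follows: dict[str, Counter], start: str, length: int, rng: random.Random) -> list[str]:
    """Stitch words together by sampling each next word in proportion to how often
    it followed the previous word in training. No meaning is consulted at any step."""
    out = [start.lower()]
    for _ in range(length - 1):
        counts = follows.get(out[-1])
        if not counts:  # dead end: this word was never followed by anything
            break
        candidates = list(counts)
        weights = list(counts.values())
        out.append(rng.choices(candidates, weights=weights, k=1)[0])
    return out


corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
for seed in (1, 2, 3):
    # A seeded generator makes each run reproducible; different seeds give different strings.
    print(" ".join(parrot(model, "the", 6, random.Random(seed))))
```

Different seeds produce different, locally plausible strings of words. They look grammatical only because the training text was, not because anything was meant.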

3. The coherence of the models creates the feeling that the machine "understands" what the person on the other side is asking, and we read its answers in that light. This is an intuitive and logical move: language sets humans apart from all other species, and when we see a machine that is close to achieving this ability, or seems to have achieved it, we tend to draw parallels and project human intelligence as we understand it onto the machine. This may happen intuitively, but model developers also push us to think that an algorithm that "manipulates linguistic form" has also "acquired a linguistic system." For example, a few days after ChatGPT's launch, OpenAI CEO Sam Altman tweeted: "I'm a stochastic parrot, and so are you." Google CEO Sundar Pichai did the same, more elegantly, when he unveiled the company's LaMDA chatbot: "Let's say you wanted to learn about one of my favorite planets, Pluto. LaMDA already knows quite a bit about Pluto and a million other topics." We are not parrots, and the bots do not "understand". These false parallels are what two researchers summarized in their study as a metaphor that "attributes to the human brain less complexity than it deserves and attributes to the computer more wisdom than it should."

Saying that the models have not mastered human language, or that they are not intelligent, is not merely the position of "skeptics": ChatGPT really does not understand meaning, context, what it is asked or what it answers. That is why the outputs of all these generators are mainly used as "tools that assist the human creative process" (as opposed to tools that replace it). But what about the future? Can the tools be expected to improve so much that they surpass human creativity and are good enough to serve as a final product? Will it be possible to use them as a search engine, a journalistic tool or a travel recommendation tool? It is hard to say. What we can do is mark out how deeply the technology should be deployed, as against the enormous hype it is getting.

Let's start with the idea that the chatbot can replace writing articles, news stories or any content meant to reflect facts or reasoned ideas, such as legal documents, licensing agreements or contracts, or help a student with homework. ChatGPT and its ilk cannot be trusted with factual writing, or at least it is better not to trust them with it. Even though the bot has been exposed to a huge amount of data, it does not have access to facts the way we handle facts, simply because it does not "understand" what facts are. It cannot judge the validity of claims, and it cannot cite or point to the sources (good or bad) it relied on. The only way to know whether its statements are correct is for the questioner to already know the facts.

All of this is happening in an age when bots are constantly deployed by malicious actors, whether for fake news or fake product reviews. The problem is so well known that most of us already make a special effort to work out what is true and what is not, and to look for the authentic human "writer" or "recommender". OpenAI knows how dangerous it is to rely on its bot for factual search, and lists this as the first of the model's "limitations": "ChatGPT sometimes writes plausible-sounding but incorrect or nonsensical answers. Fixing this issue is challenging, as... supervised training misleads the model because the ideal answer depends on what the model knows, rather than what the human demonstrator knows."

What about search? Getting quick answers to simple questions is a convenient way to navigate the world. It is common to ask virtual assistants "What's the weather?" or "What time is it in New York?". As long as the questions are simple, there is nothing wrong with technological convenience. But getting direct answers to complex questions from a bot that replicates language patterns is a dangerous way to search for information. Not only can the answers be wrong or irrelevant; given how readily we come to rely on our tools, they can also obscure the complexity of the world. No more effort to examine the range of answers among search results, to build the literacy needed to tell truth from lies and fact from fiction, or to research subjects where the searcher is not quite sure what the question is, or where the answer has never been agreed upon. All we get is an unreasoned algorithmic verdict.

What about content writing? In the past week it has become trendy to share ChatGPT outputs on social networks as a stand-in for more or less creative content: paragraph upon paragraph meant to showcase algorithmic creativity in poetry, journalism, academic research or prose. Sometimes these posts are meant to tease readers and end with a poetic sting: the post explaining what ChatGPT is was actually written by ChatGPT. It is hard to know how many casual readers actually fell into the trap and thought the text was written by a human, but even when the generator succeeded at its task, it still left a sense of shallowness, sometimes producing hollow text that looked more like "copy-paste" than original or fresh work. This does not mean it cannot be used where depth is not needed, for example in reports on the result of a football match or on the weather.

4. These problems will not be solved by exposing the model to larger datasets, giving it more parameters, or making it "stronger". Not everything can be solved with size or computing power. But computing power is definitely still part of the conversation. Training GPT-3 cost an estimated $12 million, and a 2019 paper from the University of Massachusetts Amherst found that training a single large model can emit as much carbon dioxide as five cars over their average lifetimes. These enormous economic, energy and environmental costs mean that such tools are developed exclusively by huge companies or well-funded ventures. And when you invest capital, you expect to see income. The general public is not the direct source of income for these applications; it is merely the end of a chain run by other companies that may integrate the tools wherever they can. This is why the credibility we attribute to these chatbots matters so much, why it deserves special scrutiny, and why a clear regulatory framework for the use of these tools is needed. If no ethical framework is established for their use, what we will get is the mass distribution of a tool that lowers the cost of producing content while forcing us to invest extra energy and develop new skills just to tell truth from falsehood. Good luck to us.

* * *

Who is behind the bot?

OpenAI seeks "safe" artificial intelligence

In 2015, OpenAI was founded as a non-profit organization by a group of Silicon Valley investors including Elon Musk, Sam Altman and Peter Thiel. Its initial mission was to "discover and realize the path to safe artificial general intelligence" (meaning a computer with general, human-level intelligence) and then share the results with the world.

The idea was that it would be better to develop potentially destructive technology in the "right" hands (that is, theirs and those of the team they chose), rather than leave it exclusively to for-profit companies such as Google and Facebook.

However, in 2019 the organization moved to a for-profit ("capped-profit") structure in order to attract capital, and it succeeded: a billion dollars from Microsoft, which also gained access to the range of patents and proprietary products OpenAI developed.

Under the new model, OpenAI committed to cap the returns of its first-round investors at 100 times their investment; any profit above that is to go back to the nonprofit, for the benefit of the public.