Mind over Matter: The man who turned himself into a cyborg to overcome ALS

After Peter Scott-Morgan was given two years to live, he decided to fight his fate and employed cutting-edge technology to stay alive and remain human

One evening four years ago, Dr. Peter Scott-Morgan got out of his bathtub and reached for a towel. While drying his foot, he noticed he was having difficulty moving it. The worrying discovery sent him straight to the doctors. After a year of tests, the diagnosis arrived and it was a horrible one: Scott-Morgan has amyotrophic lateral sclerosis (ALS), also known as Lou Gehrig's disease, a cruel and incurable condition in which patients gradually lose muscle control. It slowly paralyzes them, organ by organ, apart from the brain, which continues to function normally, “trapped” in the body until death. The doctors determined he had two years left to live.

A reasonable person might have accepted the terrible outcome as fate, but not Peter Scott-Morgan, a genius mathematician and a world-class expert on robotics and advanced systems analysis who taught at some of the leading universities in the world and consulted for governments and major corporations. As an expert in robotics, Scott-Morgan decided to cope with the new circumstances forced upon him and keep himself alive by turning himself into a robot. Actually, the more accurate term would be a cyborg: half man, half machine. Or as he put it in an interview with the Daily Telegraph: “I intend to become a human guinea pig to see just how far we can turn science fiction into a reality.”

Based on cold scientific observation, Scott-Morgan realized that his living organs were slowly losing any ability to serve him, and he set about producing substitutes for them. In a series of complex surgeries, combining technology from the fields of medicine and computing, he is gradually replacing his failing organs with artificial ones. Peter 1.0, the fully human version of Scott-Morgan, began the process of freeing himself from his bodily limitations in 2018, two months after the initial diagnosis. He underwent a complicated procedure in which three tubes were inserted into his body: one that transfers food into his stomach and two that drain urine and feces out of it. It was a procedure rarely attempted in medical history and required special permits from the U.K. health authorities.

How to maintain your voice, when the words run out?

Scott-Morgan’s saga calls to mind that of the world’s most famous ALS victim, astrophysicist Stephen Hawking, who like Scott-Morgan was a scientist and a Brit. Hawking used his status in the scientific community to raise awareness of the disease and promote technological solutions to improve the lives of those who suffer from it, allowing them greater levels of independence. Scott-Morgan cites him as an inspiration, drawing strength and motivation from his famous quote “concentrate on things your disability doesn't prevent you doing well, and don't regret the things it interferes with.”

Hawking managed to survive for more than 50 years with ALS. Scott-Morgan has already lived 50% longer than doctors predicted.

While recovering from the remarkable surgery, Scott-Morgan put his mind to the more technical aspects of his journey. A special Scottish sound laboratory teamed up with him to ensure he could continue to use his own voice even after he loses his ability to speak. For several weeks, he visited their recording studio and read out full texts and individual words, varying his intonations. The goal was to produce a broad databank of voice recordings, with multiple inflections to reflect as best as possible the various tones of voice he uses and synthesize them into an artificial voice that is as close as possible to the source and not as robotic-sounding as Hawking’s famously was.

As he gradually lost all ability to move his lower body, Scott-Morgan was provided with a special motorized wheelchair that enabled him to stand up and turn around. The chair was meant to be the foundation of Scott-Morgan’s self-designed exoskeleton: a mechanical, computer-guided device that was to be installed on his arms, decipher electrical pulses sent from the brain and translate them into near-full motion of his upper limbs. Combined with the chair’s ability to move freely and shift from sitting to standing positions, the full system was supposed to provide him with near-complete mobility. Unfortunately, Scott-Morgan’s health took a turn for the worse faster than expected and the exoskeleton was scrapped before it could be completed.

What takes place in the brain when we imagine saying a word?

The most complicated and daring stage in the entire process was Scott-Morgan’s attempt to find a solution for brain atrophy and to develop the ability to transfer the most important organ of all into the emerging cyborg. It required a powerful computation network that could enable him to relay his thoughts and express them freely even in the final stages of the disease, when he is completely trapped in his paralysed body.

Intel’s Assistive Context-Aware Toolkit (ACAT) was selected to carry out the complex task. It is the same system that Hawking had attached to his wheelchair and controlled via a proximity sensor in his glasses. One of the last muscles Hawking could still move was in his cheek. The sensor would detect its movement and allow him to browse the internet, write books and journal articles, teach students, play chess and, of course, produce his distinctive metallic voice.

“He wanted complete control over every little detail, every word and every letter,” Lama Nachman, the director of Intel’s Anticipatory Computing Lab, who designed Hawking’s system and worked closely with him during his final years, said in an interview with Calcalist. “It is something that the technology at the time, and even today, doesn’t allow for and we had many discussions surrounding his frustration about it.”

Nachman is also the person who designed the system that Scott-Morgan uses. In recent years the field of machine learning, considered the forefront of artificial intelligence, has made major progress. It has allowed Nachman and the team of engineers at Intel to make improvements to ACAT and bring Scott-Morgan closer to his cyborg vision, in which man and machine function as one, without the machine hampering his humanity and without the human holding back the machine.

The system enables people who suffer from ALS to conduct a conversation with the help of an eye-operated virtual mouse, with the eye motions replacing the paralysed hand. A sensor connected to a computer links the pupil’s motion to a pointer on the screen, allowing the user to select words. An AI-based system offers the user automatic word completion, similar to the autocomplete function on smartphones. The result may not be completely accurate, but it enables users to conduct an almost normal conversation.
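To illustrate the word-completion idea in its simplest form, here is a minimal sketch that suggests words by how often the user has typed them before. It is not how ACAT works internally; the function names `build_model` and `suggest` and the sample text are hypothetical, and a real system would use a far richer language model.

```python
from collections import Counter


def build_model(corpus: str) -> Counter:
    """Count how often each word appears in the user's past text."""
    return Counter(corpus.lower().split())


def suggest(prefix: str, model: Counter, k: int = 3) -> list[str]:
    """Return up to k known words that start with prefix, most frequent first."""
    prefix = prefix.lower()
    matches = [(word, count) for word, count in model.items()
               if word.startswith(prefix)]
    # Sort by descending count, then alphabetically so ties are stable.
    matches.sort(key=lambda pair: (-pair[1], pair[0]))
    return [word for word, _ in matches[:k]]


# Hypothetical typing history, invented for this example.
history = "thank you thank you very much that is the thing"
model = build_model(history)
print(suggest("th", model))  # ['thank', 'that', 'the']
```

Each selection made with the eyes narrows the prefix, so the user can often pick a whole word after one or two letters.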

“If Peter wants to write a book, he’ll have all the time in the world to write each word by himself and be in complete control,” said Nachman. “But when you hold a conversation, the social connection and the ability to spontaneously engage are far more important. That is an ability people with ALS lack. The system may be less precise in reflecting Peter’s meaning, but it allows him to hold a conversation like anybody else.”

When you limit a person’s vocabulary, you warp their will

“The moment speed requires you to choose from several existing options, the system will gradually warp in directions you don’t want it to. That’s why we are now working on an interface that will allow Peter to rank his choices. If, because of speed considerations, he chooses a less than optimal word, he will be able to immediately mark it. That note will be recorded and after the conversation is over, he will be able to do some damage control and provide feedback on the way the system conducted the interaction, thereby offering it an opportunity to learn his preferences better for next time. He can also write ‘I wouldn't answer in that way’ and offer an alternative. With time the system will get to know him and his preferences better and better reflect his personality.”
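The mark-now, correct-later loop Nachman describes can be pictured with a toy sketch like the one below. Everything in it is an assumption made for illustration: the `PreferenceLog` class, its methods and the simple plus-one, minus-one scoring are hypothetical and are not taken from Intel's interface.

```python
class PreferenceLog:
    """Toy feedback loop: flag a poor choice during a chat, correct it afterwards."""

    def __init__(self):
        self.scores = {}    # (context, reply) -> learned preference score
        self.flagged = []   # choices the user marked as less than optimal

    def flag(self, context: str, chosen: str) -> None:
        """Record, mid-conversation, that the chosen reply was not ideal."""
        self.flagged.append((context, chosen))

    def review(self, index: int, preferred: str) -> None:
        """After the conversation, penalize the flagged reply and boost the preferred one."""
        context, chosen = self.flagged[index]
        self.scores[(context, chosen)] = self.scores.get((context, chosen), 0.0) - 1.0
        self.scores[(context, preferred)] = self.scores.get((context, preferred), 0.0) + 1.0

    def rank(self, context: str, candidates: list[str]) -> list[str]:
        """Order candidate replies by learned score; ties keep their original order."""
        return sorted(candidates, key=lambda c: -self.scores.get((context, c), 0.0))


log = PreferenceLog()
options = ["fine", "great", "tired"]
log.flag("how are you", "fine")      # picked in a hurry, marked immediately
log.review(0, preferred="tired")     # corrected once the conversation is over
print(log.rank("how are you", options))  # ['tired', 'great', 'fine']
```

The point of the design is that correction happens outside the conversation, so speed during the exchange is not sacrificed for accuracy.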

Will the day ever arrive when the system can completely reflect the person who operates it?

“It depends how open you are to probing. Our system is based on going into the skull and monitoring brain activity. But there is a lot of innovation in the field of brain imaging, like encoding the electric activity in the brain using an fMRI device to decipher what the user is really thinking at any given moment: the user is shown a picture of a cat, for example, and researchers map the part of the brain that ‘lights up.’ Then the user is shown a picture of a goat and that reaction is mapped too. In this way artificial intelligence will one day be able to be combined with imaging systems. There is a lot of work being done, but we are still far from mind-reading,” said Nachman.

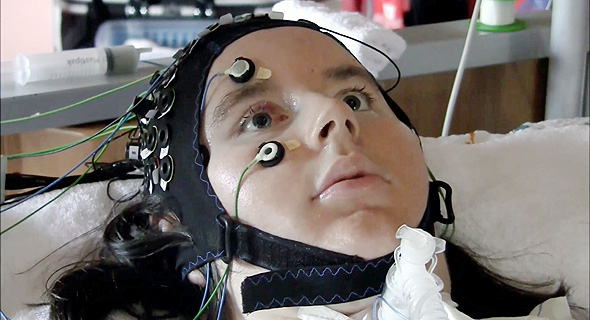

Most of the developments in the field are based on algorithms that detect the P300 signal, which is already helping ALS patients in worse condition than Scott-Morgan, who can’t even move their pupils. A monitor placed in front of their eyes presents a chart of letters that flash, one row or column at a time. When the letter that the user chooses flashes, their brain reacts roughly 300 milliseconds later (thus the name). The algorithm picks up the response using EEG electrodes attached to the user’s head and translates the signal into a keystroke. In this way, even someone who’s lost all mobility can still communicate with the outside world.
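The row-and-column logic of such a speller can be sketched in a few lines. This is a simplified illustration, not a working brain-computer interface: the letter grid, the `decode_letter` function and the response amplitudes are all invented for the example, and real systems run a trained classifier over multichannel EEG rather than comparing raw averages.

```python
from statistics import mean

# Hypothetical 6x6 letter chart; each row and each column flashes in turn.
GRID = ["ABCDEF", "GHIJKL", "MNOPQR", "STUVWX", "YZ1234", "56789_"]


def decode_letter(row_scores, col_scores):
    """Pick the letter where the strongest row response meets the strongest column response.

    row_scores[i] holds the brain-response amplitudes measured after each
    flash of row i (one value per repetition); col_scores works the same way.
    """
    best_row = max(range(len(row_scores)), key=lambda i: mean(row_scores[i]))
    best_col = max(range(len(col_scores)), key=lambda j: mean(col_scores[j]))
    return GRID[best_row][best_col]


# Made-up amplitudes for three repetitions; row 1 and column 1 stand out.
rows = [[0.1, 0.2, 0.1], [0.9, 1.1, 0.8], [0.2, 0.1, 0.3],
        [0.1, 0.1, 0.2], [0.3, 0.2, 0.1], [0.2, 0.2, 0.2]]
cols = [[0.2, 0.1, 0.2], [1.0, 0.7, 0.9], [0.1, 0.3, 0.2],
        [0.2, 0.2, 0.1], [0.1, 0.1, 0.1], [0.3, 0.2, 0.2]]
print(decode_letter(rows, cols))  # H
```

Averaging over several repetitions matters because a single flash produces a response that is easily lost in the noise of ordinary brain activity.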

Over the years this technology developed into the recording of “Motor Imagination” (tracing the brain’s activity when the user imagines moving a limb or a muscle, like their tongue, for example) and “Mental Imaging,” tracing their brain activity when thinking about pronouncing a certain syllable. That’s the closest we’ve gotten to mind-reading, but the technology is still far from enabling people who suffer from ALS to move their limbs or utter sounds freely. Brain researchers and computer scientists around the world are hard at work developing these capabilities into applicable technology. One of the teams working on it is active here in Israel, led by Dr. Oren Shriki, who heads the Department of Cognitive and Brain Sciences at Ben-Gurion University of the Negev, in collaboration with scientists from most of Israel’s universities.

How do you decipher feelings from two simple parameters?

Innovation is a way of life for Peter Scott-Morgan. His PhD from Imperial College London was the first ever granted in Britain in the field of robotics. In 1984, when he was only 26, he published his first book, “The Robotics Revolution,” which was one of the leading English-language books in the field. He came out as gay when he was a teenager in the conservative U.K. of the seventies, and is currently in a relationship with Francis, his partner of 40 years. The two were married in 2005, on the first day that the law permitted same-sex marriage. Today they manage a public fund promoting technological entrepreneurship to help people overcome a range of disabilities in addition to ALS. “We’ve always had the mantra of turning liabilities into assets, and motor neurone disease is no exception,” he said in an interview with the U.K.’s Channel 4.

Roughly a year ago, Scott-Morgan underwent his last procedure, for now, on his journey to become “Peter 2.0” as he calls it. The reason for it was a deterioration in his condition — his upper body began atrophying rapidly, robbing him of the little motion he had. In October 2019, the month when doctors had initially predicted his death, he entered the operating room where his windpipe and esophagus were separated and an additional, fourth, tube was inserted into his body, through a hole in his throat that helps him breathe with the aid of a respirator. The procedure forced him into a period of temporary muteness, which ended after several months with the installation of the artificial speech system designed by Nachman.

The main update to the system since Hawking’s time is that while the older version could only “speak” for Hawking, Scott-Morgan’s system also detects what is being said to him. Using artificial intelligence, it can analyze the other person’s speech to fine-tune the algorithm and the answers it offers him, speeding up the conversation. The next stage in the system’s development, which is being worked on at Intel’s labs now, is expanding its use from individual words to the wider context of the conversation. In other words, expressing emotion. While Scott-Morgan can speak and express himself relatively freely, his emotional world still has difficulty breaking out of the walls erected by the disease.

“As a quick solution, we enabled Peter to tag parts of his texts under specific emotions, map them out and include them in his voice and the expressions of the avatar that’s on the monitor connected to his chair,” Nachman explained. “If you simply want to say ‘I’m angry now’ and use that emotional status for the entirety of the conversation, it’s relatively easy. The problems arise when you try to provide higher emotional resolution. It’s a significant step that we’re not yet sure about.”

How do you even measure emotions?

“Frankly, it is pretty easy to measure feelings. Emotions revolve around intensity and positive or negative values. By cross-referencing both, you can determine the emotion quite well. The signals you usually use are tone, facial expressions, and physiological measurements such as heart rate, ECG, or skin conductivity. The first two signals are irrelevant for ALS patients, but the other indicators are very useful in determining intensity of emotion, while value can be expressed in words. Analyzing the combination of all the signals provides a result that lets you understand a person’s emotional state.

“The problem in this case is a philosophical one, not a technological one: we don’t always want to express the emotion we’re actually feeling. Sometimes, we want to hide our real emotion and replace it with another,” Nachman said, for example, feeling anger, but showing only equanimity. “If in the future, we will be able to analyze the physiological signals together with the text produced by the AI, we will be prevented from choosing the emotions we want to display. This is a moral problem that we still need to solve.”
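The two-parameter idea Nachman outlines, intensity crossed with a positive or negative value, can be pictured as a simple four-way lookup. The sketch below is only an illustration under stated assumptions: the function `classify_emotion`, the 0.5 threshold and the four labels are hypothetical, not part of Intel's system, and the option to override the measured result with a chosen one reflects the concern she raises rather than any existing feature.

```python
from typing import Optional


def classify_emotion(intensity: float, value: float,
                     displayed: Optional[str] = None) -> str:
    """Map intensity (0 to 1) and value (-1 negative to +1 positive) to a coarse label.

    If the user explicitly picks an emotion to display, that choice wins
    over whatever the measurements suggest.
    """
    if displayed is not None:
        return displayed
    high = intensity >= 0.5  # hypothetical cut-off between calm and aroused
    if value >= 0:
        return "excited" if high else "content"
    return "angry" if high else "sad"


print(classify_emotion(0.9, -0.7))                   # angry
print(classify_emotion(0.2, 0.6))                    # content
print(classify_emotion(0.9, -0.7, displayed="calm")) # calm
```

In this picture the physiological signals would supply the intensity, while the user's words would supply the value.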

It has been nearly a year since Scott-Morgan’s latest dramatic surgery and if his feelings are any indication, it was a success. “Statistically I should be dead, and according to the relentlessly depressing story of MND that everyone insists on peddling, I should at the very least be feeling close to suicidal. But instead, I feel alive, excited, I’m really looking forward to the future. I’m having fun!” he said in the interview with Channel 4, attributing his outlook to his partner, his family, his medical crew, technology and good fortune.

“Contrary to the torturous scare stories about how it feels to be trapped in the straitjacket of your own living corpse, the brain moves on. It grieves a bit, and then, if you give it the chance, most of the time it forgets. Days may pass when I never once remember that in the past I could walk, or move, or (absurdly) even that I could talk. My brain has its own ‘new normal,’” he concluded.