Opinion

Where Everybody Knows Your Face

Researcher Dov Greenbaum examines how legislation affects facial recognition technology for better or worse

One of the hottest trends of early 2019 was the #10YearChallenge, a social media meme in which participants post a recent photo of themselves alongside one taken ten years earlier. Commentators, from crackpot conspiracy theorists to The New York Times, speculated that the meme had been orchestrated by Facebook to improve its facial recognition technology. Facebook denied any involvement in the meme, but it is not usually shy about its use of the technology, which it debuted almost a decade ago when it began suggesting names for people in your photos based on your collection of friends.


Early facial recognition technology (FRT) measured facial landmarks to obtain simplistic pattern matches, and it often failed unless the images were highly similar to begin with. Modern FRT, by contrast, employs advanced artificial intelligence to match pairs of faces despite the subject's aging, differences in pose and facial expression, and even varying lighting conditions. Typically this is accomplished by creating manipulable 3D representations of 2D images based on features that rarely change as we age. Matching is often an iterative machine learning process that improves with every additional example it learns from, and pairs of photos of the same person taken a decade apart are exactly that kind of example: hence the #10YearChallenge conspiracy theory.
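To make the matching step a little more concrete, here is a minimal Python sketch of one common approach: a trained model (assumed here and not shown) reduces each face image to a list of numbers, or "embedding," and two faces are declared a match when their embeddings point in nearly the same direction. The vectors, the `same_person` helper, and the 0.8 threshold are hypothetical values chosen for illustration; they are not Facebook's or Amazon's actual parameters.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors (1.0 = identical direction)."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embeddings must be non-zero vectors")
    return dot / (norm_a * norm_b)

def same_person(a, b, threshold=0.8):
    """Hypothetical matcher: a match when similarity clears an illustrative threshold."""
    return cosine_similarity(a, b) >= threshold

# Toy 3-dimensional embeddings (assumed); real systems use far more dimensions.
photo_2009 = [0.9, 0.1, 0.4]
photo_2019 = [0.85, 0.15, 0.42]  # same person, ten years later
stranger = [0.1, 0.9, 0.2]

print(same_person(photo_2009, photo_2019))  # True
print(same_person(photo_2009, stranger))    # False
```

The threshold is the key design choice in a sketch like this: raising it produces fewer false matches but more missed ones, and a threshold tuned on one population may not perform equally well on another.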

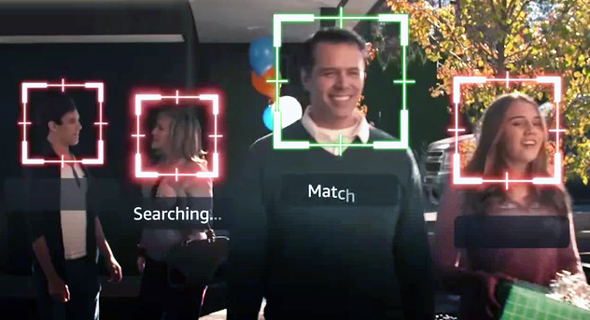

Facial recognition technology (illustration). Photo: ACLU


Amazon is also developing advanced facial recognition software, called Rekognition, and has even marketed it to police departments as a crime-fighting tool. In January, however, a study by researchers at the M.I.T. Media Lab questioned the efficacy of Amazon's technology, particularly for women and people with darker skin tones. The study found that Rekognition misclassified women as men 19% of the time, a figure that rose to 31% for women with darker skin tones.

Last year, Joy Buolamwini, one of the researchers behind the recent study, posted a spoken word piece on YouTube in which she demonstrated how various FRTs, including Amazon's, fail to correctly classify women of color. In her video, Buolamwini showed several famous African-American women whom the algorithms identified as male, including former First Lady Michelle Obama.

In another experiment, conducted last summer by the American Civil Liberties Union (ACLU), images of all 535 members of the U.S. Congress were tested against a database of mugshots. Amazon's Rekognition software, the same product marketed to law enforcement, returned 28 false positives, with people of color overrepresented: although they make up only about 20% of Congress, people of color accounted for 39% of the members falsely matched to mugshots in the experiment.

So, is Amazon's FRT racist and sexist? Probably not intentionally. The deficiencies described above likely arise because most of the facial datasets used to train these systems skew heavily toward white men.

Amazon is not alone in developing FRTs, but it has reportedly been less transparent than peers such as Microsoft and IBM about the underlying technology and its associated concerns. Amid these concerns, the city of Orlando, Florida, pulled the plug on its police FRT program with Amazon, only to renew it in October. In January, a group of Amazon shareholders and employees went so far as to demand that the company stop selling its technology to the government.

All this notwithstanding, the sheer number of technology giants developing FRTs reflects how pervasive and usable the technology is becoming across many sectors, not just law enforcement. In January, Rio de Janeiro announced that it will employ FRT at this year's Carnival celebrations in Copacabana. Banks and retail stores have been using FRT to track their customers (although the ACLU has found that they often won't admit to it) and even to discern ethnicity. Schools and workplaces are using FRT to track their students and employees, and some churches have reportedly begun using the technology to track attendance at their events.

Despite their pervasive use, FRTs and other biometric tools are, for the most part, weakly regulated in the U.S. Only a handful of states have laws addressing FRT privacy concerns, although at least seven more are considering legislation.

Privacy advocates are pushing hard for additional regulation and legislation, pointing to the relative immutability of the data collected. If an identifying number of yours, such as your driver's license number, is stolen or misused, you can simply apply for a new one. But if your face scan or fingerprints are misappropriated, there is no obvious remedy for the breach: it is much harder to change your face.

Perhaps the most prominent existing law is the Illinois Biometric Information Privacy Act, passed about a decade ago. Among other provisions, the law requires companies to obtain written consent from each individual whose biometric data they capture. It also lets individuals sue and recover statutory damages for each violation.

There has also been substantial lobbying to weaken the laws that do exist. Lobbyists point to the billions of dollars in potential liability facing companies like Facebook and Google, which use FRT in many innocuous, consumer-friendly applications but now face dozens of class action lawsuits as a result. The outcomes of these lawsuits have been mixed, and the resulting lack of clear guidance may further chill innovation in the field.

For example, in a ruling at the end of 2018, a federal district court in Chicago found that the plaintiffs suing Google had not suffered the harm required to bring a claim under the law over Google's popular photo app and cloud-based service, which uses FRT to classify faces in photo collections. By contrast, in a 2016 ruling in a case against Facebook over its tool for scanning and tagging images, a federal court allowed the claims to proceed; that case is now on appeal.

Early this year, the amusement park chain Six Flags Entertainment Corp. lost an appeal in a 2016 case in which it was sued for collecting and retaining the biometric information of teenagers applying for season passes without following the protocols prescribed by the Illinois law. A lower court had sided with Six Flags, finding no discernible harm or actual injury to the teenagers. In late January, however, the Illinois Supreme Court ruled unanimously that violating the teenagers' rights under the act was itself sufficient harm, even absent any actual injury. Six Flags is therefore potentially liable merely for collecting the data.


Because of these and other cases, many technology companies have withheld some of their services in Illinois and in Texas, which has a similar law. Google's Arts & Culture app, for instance, which uses FRT to match a user's selfie with famous paintings, was geoblocked in both states.

Privacy advocates see these cases as a significant win for consumers, but we will likely see a growing number of fun applications geoblocked for residents of states with strong biometric laws. So to find out whether the #10YearChallenge really is a shady Facebook effort, just see whether it is available in Illinois.

Dov Greenbaum, JD PhD, is the director of the Zvi Meitar Institute for Legal Implications of Emerging Technologies and Professor at the Harry Radzyner Law School, both at the Interdisciplinary Center (IDC) Herzliya.