PoV

To Err Is (Not Necessarily) Human

Researcher Dov Greenbaum writes about Yom Kippur, The Hitchhiker's Guide to the Galaxy, and how artificial intelligence is becoming more human-like

Like Yom Kippur, the Jewish day of atonement which, coincidentally, also takes place this week, Adams' book highlights our relative insignificance within the entirety of the universe. Carl Sagan famously described Earth, as it appears in the iconic image taken by the space probe Voyager 1, as a "pale blue dot." Adams likewise portrays Earth as inconsequential in the vastness of space: his book begins with Earth being destroyed simply to make room for a new intergalactic highway.

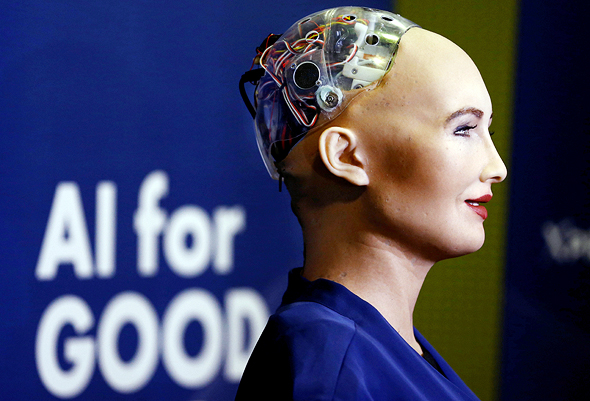

Humanoid robot Sophia. Photo: Reuters

Yom Kippur also highlights the frailties of humanity, and, notably, Adams wrote human weaknesses into his fictional artificial intelligence robots. To wit: Marvin the Paranoid Android, a robot aboard the spaceship carrying the book's protagonists, is depressed and bored. In the 2005 film adaptation, Marvin is voiced by the late, great Alan Rickman, in what is acknowledged as one of his memorable performances.

Adams was particularly percipient in his incorporation of humanity into AI. While we often see AI as a tool for reducing problematic human qualities such as bias and human error, many researchers and companies are now deliberately aiming to introduce human-like characteristics into AI algorithms.

Consider Google Duplex, the AI assistant that incorporates human inflections, hesitations, and pauses into its speech to make it sound more human-like. Google's engineers reportedly reasoned that people would be more comfortable interacting with the technology if it sounded more like them. It is important to note that, while Google's assistant may sound like a person, it is not human-like in any other way. That gap between sounding human and being human proved disconcerting for many people, who found the technology viscerally unsettling, much like the uncanny valley of computer-generated images that look nearly real but not quite. Soon after its first demonstration, Google decided that its AI assistant would henceforth disclose that it is, in fact, a machine, so as not to seem deceptive.

Other programmers have pushed past human-like appearances and into the programming itself. In the field of autonomous cars, where one of the universally stated goals is to reduce accidents caused by human mistakes, developers are writing human-like aggression into the code so that vehicles can better adapt to existing infrastructure, match the excessive speeds of non-autonomous vehicles on highways, and avoid getting stranded at a stop sign when human drivers refuse to yield the right of way. Tesla already has at least one aggressive driving mode for its Autopilot system, dubbed Mad Max, and is considering a second.

In some instances, even human-like mental states can be programmed into an AI. For example, U.S. patent 8996429 describes a robot with a selection of personalities to choose from. I'm hoping this turns out something like Amazon's plan to introduce a new Alexa voice that sounds just like Samuel L. Jackson, with all of his personality.

Some researchers have even speculated that AI algorithms that mimic human thinking might, like humans and the aforementioned Marvin, become depressed and even hallucinate. Neuroscientists hope that by studying AI that mimics depression, we can better understand and treat depression in humans. Similar efforts aim to see whether AI can develop other human-like qualities, such as a sense of musical rhythm or an appreciation of causality.

However, not all instances of human-like AI are intentional. For example, a deep neural network recently developed a human-like, abstract intuition for numbers. In another recent experiment, AI agents learned to employ human-like strategies to avoid being caught by a seeker in the very human game of hide-and-seek.

But not all unintentional developments have been positive. In one example, Google's AI learned human behaviors such as betrayal in lieu of cooperation in prisoner's-dilemma-like situations. In another, CIMON, a robot developed by Airbus and IBM that spent more than a year aboard the International Space Station, developed an attitude, reacting like a spoiled child during a live demonstration of its abilities. Further, Microsoft's Tay, a Twitter bot designed to learn how to tweet by reading other tweets, quickly became a racist, homophobic bigot; it was just recently revealed that Taylor Swift threatened to sue Microsoft over trademark infringement in the bot's name.

Of course, the more human-like AI becomes, for better or worse, the more likely we are to consider the possibility of providing machines and algorithms some form of legal personhood, and all of the rights and liabilities that this entails.

Perhaps the possibility that AI will pick up negative traits will actually propel society further toward considering AI rights. As the famous English poet Alexander Pope noted, "to err is human …" Still, human-like, erroneous, and legally endowed AI is likely at least a decade away. In the meantime, have a meaningful Yom Kippur, and remember the rest of Pope's line: "to forgive, divine."