“A coalition of hate”: The online extremists that are testing Big Tech

Hate speech has been bubbling on social media and the internet for decades - but it all came to a climax this month at the heart of America’s democracy

When far-Right extremists stormed the nation’s Capitol at the start of the month, it was the culmination of weeks and months of online hate activity across multiple social media platforms. Dr. Gabriel Weimann, a professor of communication at the University of Haifa, has been studying the patterns of extremists and terrorists online and offers some insights on what can be done to tackle the rise of hatred on the internet.

“It was clear - the writing was on the wall and in many of our studies we just presented the wall we saw,” he told CTech. “We saw many walls, and we saw violent writings on all of them.”

The offline consequences of online hate came to a climax earlier this month when thousands of right-wing extremists and Trump voters who saw themselves as disenfranchised stormed the U.S. Capitol. Having initially gathered for a ‘Stop the Steal’ rally protesting Joe Biden’s victory in the U.S. presidential election, the various groups protested violently, some wearing racist, anti-Semitic, and inciting clothing. One man was photographed wearing a sweater that said ‘Camp Auschwitz: Work Brings Freedom’.

“It has become now a Coalition of Hatred,” Professor Weimann continued. “This is what I find more alarming than anything else. It’s not a single group and it’s not a single hatred… You have multiple groups all very different from each other. Some are focused on hating immigrants, some are focused on how Trump was treated, some hate Jews, Blacks, Gays, or women.”

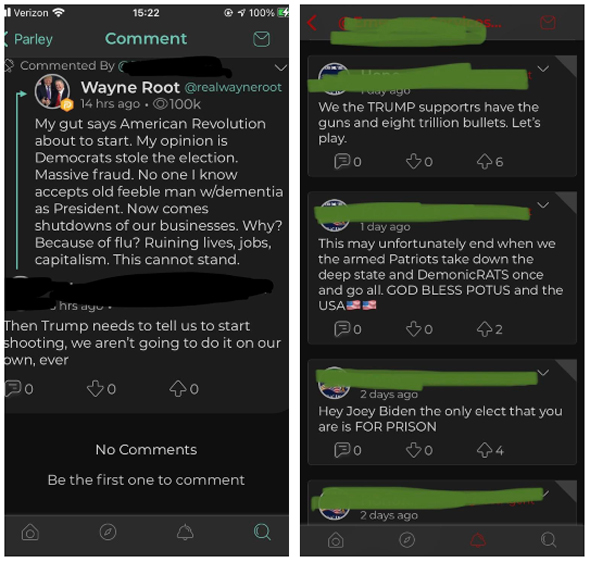

A common thread among those who marched in the name of hate and perceived injustice was that they met in fringe online communities hosted on platforms such as 8chan or Parler. These platforms, which pride themselves on enabling free speech and unfiltered communication, are allowing users to “cross a red line” from hate speech to terrorism and incitement to violence, something that Professor Weimann explained is a new trend in his research.

“For many years, I was studying online terrorism. Our definition of terrorism includes the threat of violence. Violence had to be part of it,” he said. This means that, for decades of research, the threshold he used to separate hate speech from incitement to terrorism was a call for violence, not simply words that some found offensive. Over the years, those goalposts began to shift as rhetoric grew more intense and hateful, particularly as online discussions became easier than ever to access.

Today, social media platforms have evolved to become the online equivalent of public squares where citizens can engage in free and open dialogue - sometimes leading to deadly results. “In the last few years things have changed, because those groups that enjoy the freedom of speech or pushed people to the edge, crossed the red line, and once they started shooting people in synagogues, mosques, and now Capitol Hill, it became clear that you are looking at domestic terrorism.”

The Capitol Hill riot and its tragic consequences resulted in the second impeachment of President Trump after the House of Representatives charged him with ‘incitement of insurrection’. Professor Weimann, however, believes that what happened that day had long been in the works and may have been planned well in advance.

“The online platforms and social media played a crucial role in forming, in channeling, and uniting these groups and finally directing them to the same place to take this chance to be violent,” he said. “All these calls to shoot, and fire, and be violent were all there. And not just on the last day. It was there for weeks. It could be identified… the alarm bells were ringing but nobody listened.”

One week after the riot, and only hours after the impeachment vote, CNN reported that investigators were pursuing signs that the attack on the Capitol had been planned in advance.

For years, social media companies and Big Tech have been under pressure from advocacy groups hoping to curb the violence and hate speech they allow on their platforms. Facebook and Twitter banned Holocaust denial on their platforms only in October 2020, and yet three months later a man wore an Auschwitz sweater at the front gates of American democracy. Even as social media companies rush to recategorize Holocaust denial as hate speech and finally remove some of the images and hashtags that plague online forums, Professor Weimann sees it as a futile gesture.

“The idea you can block the voices of hate and drive them away is a naive one,” he told CTech. “Anyone who thinks Facebook removes them and Twitter removes them and then they’re safe, is naive.”

The spread of hate online goes beyond the speech that runs rampant on social platforms; it extends to the goods and services that can be purchased online, too. After the image of the Auschwitz sweater went viral around the world, the ADL (Anti-Defamation League) and the World Jewish Congress (WJC) called on companies that operate online marketplaces to “strengthen and better enforce policies banning any product that promotes or glorifies white supremacy, racism, Holocaust denial or trivialization, or any other type of hatred or violence.”

“Online retailers have profited directly from the sale of these items, and have indirectly facilitated the spread of hateful ideologies, allowing extremists to proudly express their racist, sexist and anti-Semitic views,” added Jonathan A. Greenblatt, CEO of the ADL.

As long as advocates are willing to hold Big Tech accountable, the companies will keep tweaking their policies in an effort to stop hate from spreading again. But that is not a practical solution to the problem, and it raises ideological questions about who controls the voices on the loudest platforms in the world, especially when those platforms can silence the President of the United States without consent or oversight.

“Do we want private companies to play the role of political censors? Do we want the Mark Zuckerbergs of the world to decide what should be on and what not? Do we want them to decide who the terrorist is and who is not? I’m not sure. This is not democracy... certainly not the way I see it,” Professor Weimann said.

What is becoming clear, at least to Professor Weimann, is that tackling the coalition of hate will take a series of steps: intense monitoring of content, holding social media companies accountable, and establishing counter-campaigns that tackle the ‘virus of hate’ among users themselves.

“You need a combination of countermeasures. Some of them are passive, some are aggressive, some are defensive and some of them are offensive. There is no 100% solution. We can never get rid of hate messages and calls for violence but we can fight back - and certainly fight back better than we have so far,” he concluded.