“We are in an AI arms race, and today deepfakes are the new AI cybersecurity threat”

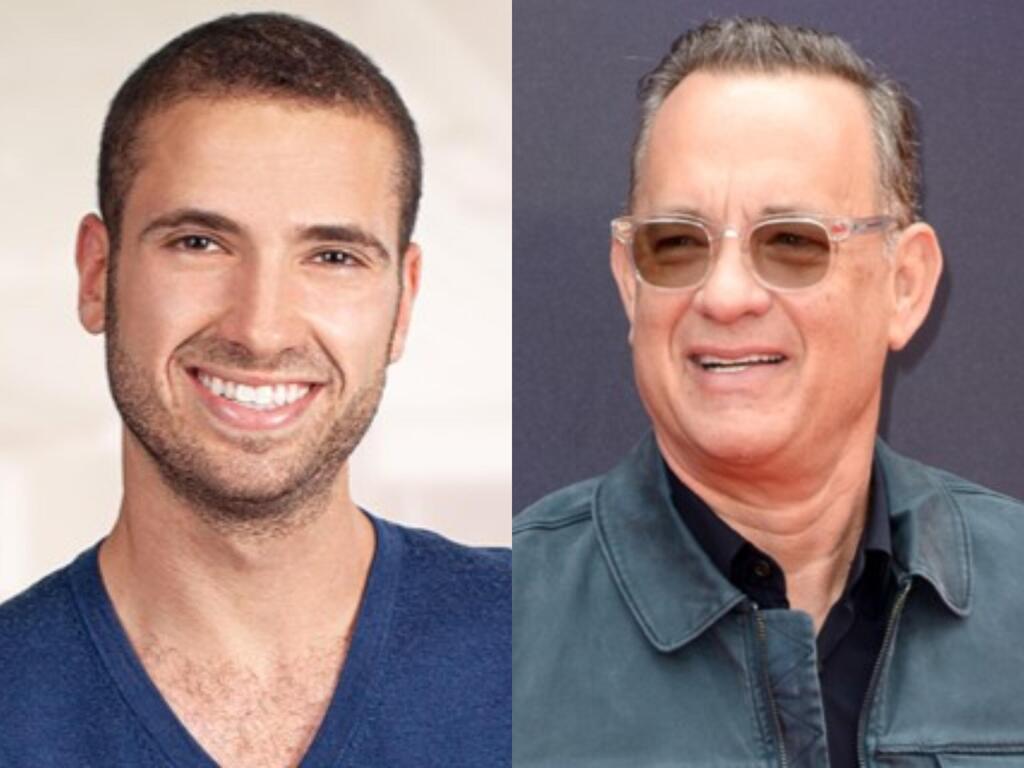

According to Michael Matias, CEO of AI cybersecurity startup Clarity, the incident in which scammers used Tom Hanks' image to create a fake ad is only the tip of the iceberg. Just as network and application security once emerged as fields of their own, we are now seeing an exponentially rising attack vector of social engineering and phishing.

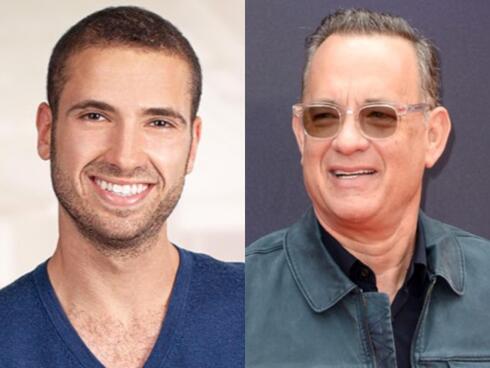

With almost perfect timing, as if written by Hollywood screenwriters, actor Tom Hanks warned his fans this week about a deepfake commercial of him circulating online. The video featured a synthetic Hanks supposedly advertising a dental plan. The actor's warning came shortly after the historic agreement between Hollywood screenwriters and the major studios, which regulates the use of AI in the entertainment industry.

As we take another giant leap into the digital age, the deepfake threat is growing. Deepfakes are not new, and hostile actors have used them for years. But with the rise of generative AI tools and the spread of apps built on them, we now face a flood of new, more sophisticated deepfakes. Today's AI-generated videos and audio recordings can be so realistic that they are hard to distinguish from the real thing. Deepfakes are already being used to spread misinformation and damage people's reputations, and they will only become more convincing.

Deepfakes are not just about imitating celebrities or manipulating media for dubious gain. They pose a serious threat to financial systems and societal norms. A chilling example comes from the software company Retool. In a targeted cyberattack, a hacker used an AI-generated deepfake of an employee's voice to breach the firm's defenses, ultimately compromising 27 cloud customers. The hacker also knew the office layout, the employees, and internal processes, showing how deep the reconnaissance and sophistication behind these attacks can go.

"Deepfakes are a cyber threat; just like the emergence of network and application security, we now experience an exponentially rising attack vector of social engineering and phishing," explains Michael Matias, a former officer in the IDF's 8200 cyber unit and the current CEO of Clarity. "We are in an AI arms race. Detecting deepfakes demands constantly adapting to sophisticated technology. This is why we prioritized AI experts from the 8200 cyber unit. It takes an AI cybersecurity company to address this challenge," he stresses.

Clarity, an AI cybersecurity company, builds systems to protect against the new social engineering and phishing attack vectors created by the rapid adoption of generative AI and deepfakes. The company is building the trust layer for digital media and communication by detecting AI manipulation in videos, images, and audio. It was founded by former 8200 officers and AI experts, together with professors from Stanford and MIT, out of deep concern for the safety of democratic institutions worldwide and for people's future ability to trust what they see and hear.

According to Matias, the problem is so widespread that reports from Bloomberg and HSRC put the deepfake detection market's growth at a 42% CAGR, making it one of the fastest-growing markets today. Clarity and the World Economic Forum both track a 900% annual increase in deepfake videos online, that is, roughly tenfold growth each year. At the end of 2019 there were an estimated 15,000 such videos; today the number runs into the millions.

Gil Avriel, Clarity's Chief Strategy Officer and a former executive with Israel's National Security Council, echoes this concern: "Deepfakes are not only a threat to financial systems; they undermine trust in our senses and have the potential to disrupt the stability of democracies worldwide."

As deepfakes grow more sophisticated, the line between truth and deception becomes increasingly blurred. These digital doppelgangers, armed with startling realism, challenge even the most advanced defense systems. As Matias points out, "The largest enterprises in the world are seeking solutions. It's not trivial for a young startup, but the threat of deepfakes is palpable. We're in the right place at the right time."

Celebrities, with their vast followings, are at the epicenter of this storm. From CBS News' Gayle King and revered actor Tom Hanks to YouTube star MrBeast, AI-generated renderings of their likenesses are being used maliciously in fake promotions. As Tom Hanks noted, the prospect of AI allowing fabricated versions of public figures to proliferate is unnerving. Yet celebrity image misuse is merely the tip of the iceberg.