“When you raise walls around data, employees go around them”

The rise of Shadow AI pits corporate security against workplace reality.

Cybersecurity is the tech industry’s longest-running game of cat-and-mouse: when new threats to data security arise, new controls are put in place to stop them, which in turn leads to more sophisticated threats, and so on. As organizations continue to adopt AI, that classic push-and-pull has resurfaced.

“Shadow AI” is the emerging term for employees’ tendency to default to whatever AI tools are most accessible and convenient, even at the expense of security or privacy. The use of these tools often stems from a desire to get more work done more quickly, but, as you can imagine, that can create data security issues.

Many employers are less than excited about their proprietary code or IP being copied and pasted into publicly accessible AI platforms that they can’t keep secure. As a result, they are investing in so-called “Private AI” systems, which are designed to contain data and reduce risk.

These two trends coexist, creating tension between employers, who want to keep their data locked down by controlling their employees’ AI usage, and employees, who want to use the best, most advanced tools to get their work done.

“When you raise walls around data, then that actually gives employees an incentive to go around those walls,” says Professor Eran Toch, an expert on privacy and human-AI interaction at Tel Aviv University.

“Employees face a lot of stress, as all of us know. They live in a very competitive environment, and for many types of workers, generative AI is really an extreme boost for productivity. So when organizations try to limit certain sorts of uses, that actually gives an incentive for employees to gain some competitive advantage by using shadow AI. And that creates new security vulnerabilities. It's a wicked paradox,” he explains.

Generally speaking, there are two approaches to implementing Private AI, each with its own issues: you can either create a proprietary, in-house AI tool for employees to use instead of publicly accessible tools, or you can monitor employees’ use of those public tools to make sure that no critical data is exposed.

Professor Eran Toch, School of Industrial & Intelligent Systems Engineering at Tel Aviv University.

(Photo: Tel Aviv University)

Toch expresses his problem with the first approach bluntly: “It’s always worse.”

“No matter what the vendor tells you, nothing beats the latest versions of Claude and Gemini and ChatGPT,” he says, explaining that these in-house platforms are often just modified versions of Meta’s LLaMA (Large Language Model Meta AI), or, as he puts it, “a dressed up LLaMA.” Even if such tools are well developed, Toch argues, they still pale in comparison to the cutting-edge models that are frequently released to the public.

One of the key challenges with the second, monitoring-focused approach is that keeping employees from leaking sensitive data while they use public AI tools is really, really hard to do.

Stopping leaks before they happen

“Shadow AI is there. We can’t avoid it. But we give you the tools to at least have visibility and control to know what's being done in your company,” says Yoav Crombie, CEO and co-founder of Private AI startup AGAT Software.

“As a person who has been developing software for more than 20 years, it's a much bigger challenge technically than what it appears on the surface.”

Crombie describes AGAT's approach as an infrastructure layer that can be tuned to each company's security needs, depending on how strict it wants to be.

“They can say, ‘I'm okay with using ChatGPT, but I just want visibility and control,’ or the same client could say ‘for some use cases, it's okay to use ChatGPT, but if you want to connect it to your payment system and get insights, maybe I don't want to connect it to the outside world in any aspect,’” he explains.

Keeping individuals from leaking important information to public AI tools is hard enough, and the challenge only grows once AI agents enter the picture.

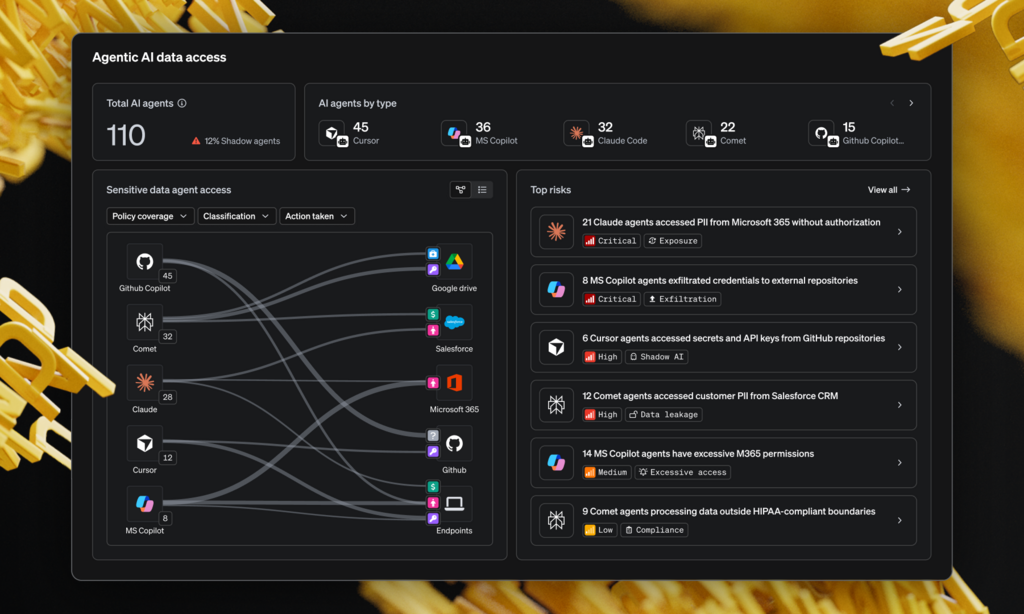

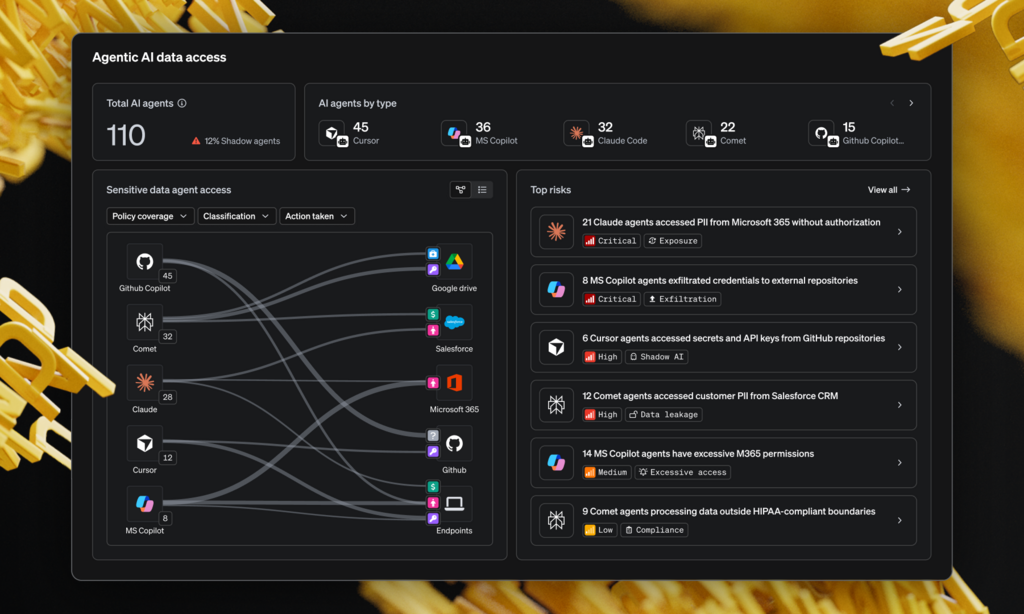

Eran Barak is the founder of MIND, another Private AI vendor, which focuses particularly on identifying and controlling agentic AI activity within organizations’ ecosystems.

Yoav Crombie, CEO and co-founder at AGAT Software (Left); Eran Barak, CEO and founder at MIND (Right).

(Photos (L-R): AGAT; Ohad Kab)

“Traditional security tools are almost obsolete these days because they’re based on the assumption that ‘Zachy’ is one identity. Today, ‘Zachy’ now has like another 100 small AI agents operating under his name,” Barak says.

MIND’s platform is designed to surface and govern that agent activity, which Barak describes as a growing and underappreciated risk in enterprise data security.

“The problem is that these agents are not just responding to you, they can act on your behalf. They can scrape data, they can summarize it, they can do so many things in your name, and you won't even know,” Barak explains.

In practice, platforms like AGAT's Pragatix and MIND do not try to stop employees by “watching their screens.” Instead, they operate as AI-specific control layers that sit between employees and public AI tools. When an employee (or one of their agents) attempts to send sensitive material to a tool like Claude, the request is intercepted before it reaches the external model. The system inspects the prompt in real time, checks it against company policies, and can block or redact material classified as proprietary or high-risk.
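
To make the intercept-inspect-act flow more concrete, here is a minimal sketch of such a control layer in Python. It is not how Pragatix or MIND actually work; the detector names, regular expressions and block/redact policy are hypothetical assumptions chosen for illustration. Real products would use far richer classification than a few regexes, and the crude "confidential" rule here would block any prompt containing that word.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical detectors (name -> regex). Real products would rely on far richer
# classification, such as ML models or fingerprints of known internal documents.
DETECTORS = {
    "aws_access_key": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "confidential_marker": re.compile(r"\bCONFIDENTIAL\b", re.IGNORECASE),
}

# Hypothetical company policy: what to do when each detector fires.
POLICY = {
    "aws_access_key": "block",
    "confidential_marker": "block",
    "email": "redact",
}


@dataclass
class Decision:
    action: str                # "allow", "redact", or "block"
    prompt: Optional[str]      # text forwarded to the external model; None if blocked
    findings: List[str] = field(default_factory=list)


def inspect_prompt(prompt: str) -> Decision:
    """Check an outgoing prompt against policy before it leaves the company."""
    findings = [name for name, rx in DETECTORS.items() if rx.search(prompt)]

    # Any "block" finding stops the request entirely.
    if any(POLICY.get(name) == "block" for name in findings):
        return Decision("block", None, findings)

    # Otherwise, mask each "redact" finding and forward the rest.
    forwarded = prompt
    for name in findings:
        if POLICY.get(name) == "redact":
            forwarded = DETECTORS[name].sub(f"[REDACTED:{name}]", forwarded)

    action = "redact" if forwarded != prompt else "allow"
    return Decision(action, forwarded, findings)


if __name__ == "__main__":
    print(inspect_prompt("Summarize this thread from jane@example.com").prompt)
    # Summarize this thread from [REDACTED:email]
    print(inspect_prompt("Use key AKIAABCDEFGHIJKLMNOP to deploy").action)
    # block
```

The design choice worth noting is that blocking takes priority over redaction. A single high-risk hit stops the whole request. Lower-risk hits are masked so that the rest of the prompt can still reach the model.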

Both companies said their systems are designed to minimize data retention: they keep only limited metadata, such as file paths or access context, and redact or anonymize sensitive content, so risks can be flagged without broadly storing the underlying data. The goal, as the companies describe it, is a system that can identify and act on high-risk data without holding on to the data itself.
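
The following minimal Python sketch shows one way a log entry could keep access context without keeping the content. It is an illustration under stated assumptions, not either vendor's actual design. The field names, the keyed-hash (HMAC) pseudonymization of user IDs and the hard-coded key are all hypothetical. The record stores a file path, a destination, the detector hits and the content's length, but never the text itself. File paths can be sensitive too, so a real deployment might pseudonymize those as well.

```python
import hashlib
import hmac
import json
from datetime import datetime, timezone
from typing import List

# Hypothetical per-deployment secret used to pseudonymize user IDs. In practice it
# would come from a secrets manager rather than being hard-coded.
PSEUDONYM_KEY = b"replace-with-a-real-secret"


def pseudonymize(user_id: str) -> str:
    """Keyed hash: the same user maps to a stable token, but the log never holds the raw ID."""
    return hmac.new(PSEUDONYM_KEY, user_id.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def audit_record(user_id: str, file_path: str, destination: str,
                 content: str, findings: List[str]) -> dict:
    """Keep access context and detector hits; record only the content's size, not its text."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": pseudonymize(user_id),
        "file_path": file_path,
        "destination": destination,
        "findings": sorted(findings),
        "content_chars": len(content),
    }


def to_log_line(record: dict) -> str:
    """Serialize one record as a JSON line for whatever log store is in use."""
    return json.dumps(record, sort_keys=True)


if __name__ == "__main__":
    rec = audit_record("alice@corp.example", "/finance/q3_forecast.xlsx",
                       "chat.example-ai.com", "Q3 revenue: 4.2M", ["confidential_marker"])
    print(to_log_line(rec))
```

Because the user token is a keyed hash, security teams can still tell that the same person triggered several alerts. The raw identity stays out of the log.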

MIND’s new AI agent mapping feature lets companies put tailored guardrails around employees’ agentic AI activity.

(Photo: MIND)

Even with all the extra measures being taken to balance data security with employee privacy, surveillance-oriented approaches to Private AI still have a fundamental flaw, according to TAU’s Toch.

“Surveilling employees will always have a limit. It's extremely hard to just fence in employees today when they can, you know, take an image of something with their iPhone and just send it to Gemini or to ClaudeBot at home,” he says.

In other words, the problem is not just technical, but structural. Once organizations accept that determined employees will always find a way around controls, the focus shifts from outright prevention to managing behavior.

Crombie embraces this idea: “As a vendor in the cyber industry for many years, I strongly believe in education more than just blocking; because whenever you block an employee, they can find another way. It's more important to give constructive feedback for the user to know, ‘hey, you're doing something that may pose a risk to the company’ than simply blocking you violently and disconnecting you from the network or something like that.”

That approach may reduce friction, but it still assumes that close observation is the price of safe AI use at work. For Toch, the question is not just whether employees are corrected too harshly. It is a far less practical and far more human-centric issue.

“Sometimes employers don't understand, but employees do need privacy. They do need to protect the way they work from their bosses,” he says.

It boils down to trust

Research from Toch’s lab in TAU's School of Industrial & Intelligent Systems Engineering suggests that monitoring changes behavior in measurable ways.

“We're talking about people who we need to be creative - programmers for example - and when people are monitored, they conform to certain aspects of the work, but they really lose the voluntary and creative way they do things. And that has its own cost,” he says. “You might gain some control, and you might gain some privacy for your customers, but you harm the privacy, the freedom, and the autonomy of your employees.”

Rather than treating Shadow AI as a discipline problem, Toch argues that organizations need to rethink how they relate to their workforce.

“The way to do it is to think about the relationship with the employees,” he says. “You can reason with employees on what they do and don't do with Shadow AI. And if you don’t surveil them like children, but actually count on their decision making to use generative AI in certain ways and not others, they might make better decisions.”

That, he adds, is not a radical departure from how companies already operate.

“Employers trust the decision making of employees all the time. It’s naive to think you can discipline and surveil employees constantly. It just doesn’t work if people have options and a certain level of autonomy.”

Private AI systems and data protection tools, Toch stresses, still have a role to play. But they cannot substitute for trust.

“I’m not saying private AI is useless - it’s not. And I’m not saying data protection systems are useless - definitely not,” he says. “But all of those things should happen alongside conversation with employees, and in a non-binary way of doing things.”