When artificial intelligence meets sexual fantasies

With sites dedicated to AI-generated pornography, customized to a user's preference, and images based on real women, the way AI is being used for porn is raising new concerns about sexual exploitation, consent and misogyny

LoRA is not unlike a Barbie doll. But instead of a limited set of plastic parts produced by the manufacturer, LoRA offers endless variety, limited only by what words can describe.

LoRA Sex 008, for example, is a young, white, rainbow-haired woman straddling a white man in a sexually explicit pose. Her image has been downloaded by 33,375 users in the past four months, even though it included the warning "Beware, Stable Diffusion (a deep learning model that turns text into images) is not good at creating hands, it’s been tried many times." Another LoRA model was downloaded 47,686 times and received a rating of 4.9 stars, even though the creator warned that she "doesn't always connect to a person, so it's better to try to avoid full body instructions".

These warnings are important since writing highly precise prompts is a fundamental condition for working successfully with any generative AI model. In this case, the instructions must be precise so that the generated figures appear in poses or perform actions exactly as users imagine them.

LoRA (Low-Rank Adaptation) is a sort of basic template for text-to-image generation. Personalization of this kind, described by researchers at Tel Aviv University in an August 2022 article, allows users to visualize "specific unique concepts, change their appearance, or place themselves in new roles and scenarios" using a small number of images.

LoRA has created a unique moment in the pornography industry, where expertise is no longer a barrier, and anyone can fulfill any fantasy they can imagine. All it takes is a little time and five or six images, and you have a customized digital sex doll for your use: a woman with any face, skin tone, eye color, or body type, personalized to the user's taste.

Digital sexuality

This may sound complicated, but luckily for the creators of AI-based pornography, it's incredibly convenient. Reddit communities collect images, open-source code allows for the creation of explicit images at no cost, and platforms readily host them. All of this is consolidated in a thriving community whose members don't keep much to themselves. They consult with each other over how to create "LoRAs," which text-to-image generators to use, and how to adjust pelvic poses or bust sizes. They draw on collective wisdom to spot lapses in realism, and they debate the boundaries of good taste and whether such boundaries exist at all. In Discord forums, discussions include how to make the text-to-image generator undress or dress a figure, or how to train a model with just a few low-quality images.

The process requires repeated experimentation and delicate tuning of the commands given to the model. Users adjust poses, colors, levels of explicitness, the number of visible body parts, hair color, size or placement of sexual organs, and accessories and clothing. Precise adjustments can be made with as few as five or six images of your choice. Some users offer paid services for those who struggle or lack patience, while others accept donations. Valberry, focusing on Korean pop singers, offers a personalized LoRA for $3.75. Another creator maintains a Patreon page named Uber Realistic Porn Merge, where users can pay from $3 to $12 per month to get "access to everything I'm working on as it is tested."

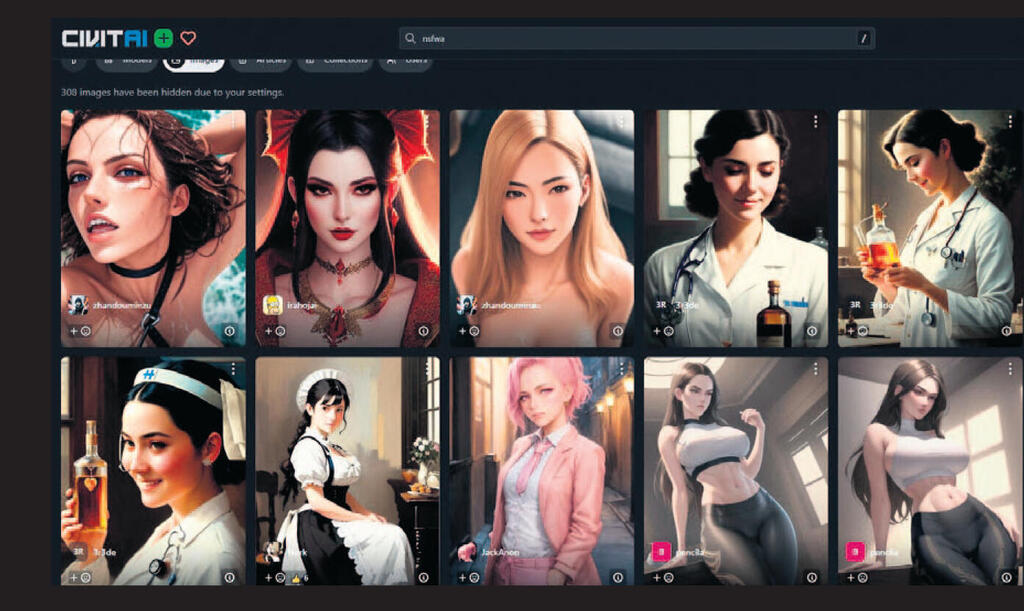

Most of this content creation happens on CivitAI, a site for custom AI models, colloquially known as "Stable Diffusion for Perverts." Founded in January, CivitAI has rapidly become a leading platform for personalized pornography models. Even though it contradicts the company's terms of service, the site includes numerous LoRAs based on real people, with their images taken from the internet without consent.

Shockingly, if you have publicly available Facebook profile pictures, you could become a LoRA too. You can be placed in specific poses, wearing clothes or not, against a starry sky or by the seashore. "These images are taken from public Reddit images (e.g., r/innie) and do not violate any terms of use. Please do not remove," says the creator of one of the models, which has been downloaded 24,093 times since its creation in June.

Those who upload LoRAs to CivitAI provide free access to anyone who chooses to use the same custom model. If the LoRA is based on a real person rather than a synthetic creation (of which there are many), the creator grants users unrestricted access to do what they want with that figure. A LoRA does not necessarily have to include specific facial features of a real person but can represent specific body parts. A third user can take multiple LoRAs, combine them, and add a line of prompts to generate a new image.

Designing LoRAs and creating a personalized image requires an extraordinary level of detail. One LoRA, for instance, created last May and downloaded 4,159 times, includes a list of positive prompts, including "woman, blonde, wide open blue eyes, bare neck, tattoos, very large breasts." Alongside these, there are negative prompts (what the user does not want to see) like "poor quality, mutations, duplicate body parts, bad anatomy, birthmarks, watermarks, username, artist's name, child, old person, fat, obese."

"They see [the image] as an object," says Dr. Yaniv Efrati, Head of the Behavioral Addiction Lab at Bar-Ilan University's Faculty of Education. "They discuss what it should look like, accuracy and realism. It’s approached from a technology, innovation and creativity-focused standpoint which allows users to distance themselves from the moral discussion, which would force users to ask themselves whether what they’re doing is appropriate, respectful or right, if they’re doing something wrong or objectifying."

Shulamit Sperber, a licensed sex therapist and a member of the medical team for sex therapy at Reuth Medical Center and Ishi clinic, offers another perspective. "Some see this as a new form of digital sexuality or people whose sexuality is connected to digital means. For them, technology is significant for their sexual identity."

Pornography as a technological accelerator

The pornography industry is known not only as a testing ground for new technologies but also as a pioneer in their adoption. Pornographic tapes drove the adoption of VHS technology in the video industry. At that time, Hollywood was concerned about piracy and avoided promoting home video recording devices. The porn industry, however, harnessed the technology to reach new audiences. By the late 1970s, more than half of VHS tape sales were pornography. Later, the industry embraced DVD technology, allowing consumers to skip to their favorite scenes. The porn industry has also played a pivotal role in shaping the way people consume and pay for content online. It was one of the first industries to generate significant revenue from the internet in the 1990s, introducing the concept of paid subscriptions and online payments.

In recent years, the dominant point of discussion involving pornography and technology has been deepfakes: the ability to implant or replace facial features in video clips. Today, about five years after the widespread adoption of this technology, 96% of all synthetic videos on the internet are sexual, according to the Dutch company Sensity, which monitors such content. Many of these videos feature women who did not consent to the use of their image. There are now porn websites dedicated solely to this type of content, categorized by celebrities such as Emma Stone, Scarlett Johansson, Emma Watson and even U.S. Congresswoman Alexandria Ocasio-Cortez. Among them, MrDeepFakes, a popular deepfake pornography website, attracts over 17 million monthly visitors and adds more than a thousand videos each month.

Now, with the advent of generative AI, there's no need to settle for recycled materials; you can create new ones. The dominant AI model for this purpose was OpenAI's DALL-E 2, but open-source models such as Stable Diffusion have become excellent at specific tasks after targeted training by users. For example, they can incorporate images of specific people in specific poses or with specific graphics. When Stable Diffusion was launched, it was primarily men who grasped its potential, and in the early months, most of the images and models uploaded to the platform were related to "Hentai," a Japanese sexual animation genre. The situation was so severe that the company had to release a Twitter statement: "Don't create something you'd be ashamed to show your mom." Unfortunately, this did not deter users.

A perverted dystopia

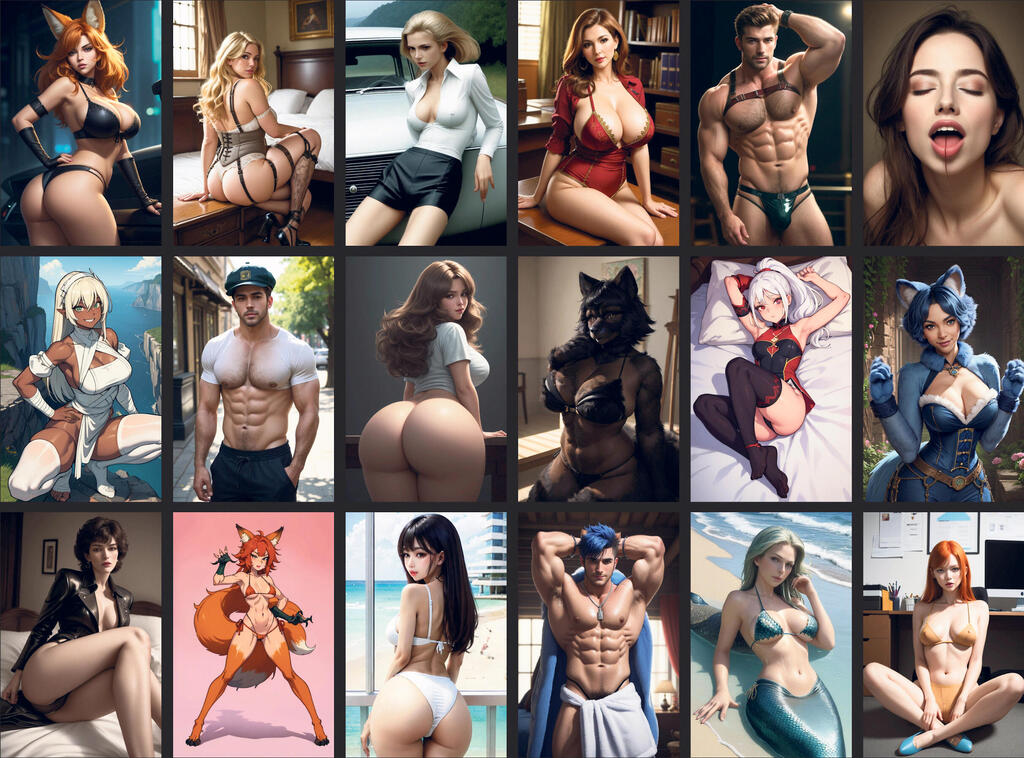

The body of a naked woman is placed in an unusual position. It's not just the impossible pose but the entire body that seems distorted. The waist is unnaturally narrow, and the abdominal muscles are emphasized. The chest is of remarkable proportions, the hips muscular, and they are all covered with a thin layer of fur. The face isn’t visible; only the neck can be seen, giving the impression that the figure is an animal, possibly a wolf. These were the first images I encountered when I searched the internet to learn about porn created by generative AI tools.

I knowingly entered a Discord forum group used by Stable Diffusion users, called “furry-nsfw,” a community known for giving animal-like attributes to its characters. I thought that distancing myself from humans would make it easier, but I was wrong. I entered another, much larger group labeled “nsfw” (Not Safe for Work). I thought that a large community meant a broad consensus. I was wrong again. There were GIFs of young girls with adult female bodies dominating the content. One of them was partially nude and crying. In the "funny" nsfw group, a new collection emerged that made it unclear whether the distortions were intentional or part of the peculiar errors that AI tools sometimes produce: a woman with a double pelvis, another with breasts on her arm, or lips on her chest.

"Some might argue that as long as it doesn't harm any human beings, everything is fine," explains Sperber. "But what will happen to human sexuality when we become accustomed to this? Once personalization allows you to create your exact fantasy, what will happen to your sexual behavior in the real world?"

Some of the images created by text-to-image generators are unsettling. While many are illustrated, some suffer from realism issues. But some images look highly realistic, especially those based on real people. When browsing through galleries featuring unrealistic poses, improbable body parts, and extreme settings, it feels like you’re looking at something abnormal.

"When simulated reality becomes increasingly indistinguishable from actual reality, the result can be difficult and disturbing, even dystopian," says law professor Shulamit Almog from the University of Haifa. "As technology advances, becomes more powerful, and sees wider application, the dystopian aspect sharpens. And technology keeps advancing because economic interests are growing, and massive funding ensures its prosperity."

Child exploitation

If you don't have the energy to study and create your own LoRA, you can purchase a personalized one for a few dollars. If you're not interested in creating images of people you personally know, there's an even more convenient solution: browse websites like Mega Space, where for $15 a month, you can place any celebrity in a sexual pose of your choice. "Create anything," they offer at the top of the site. The subscription also grants access to over 20,000 different LoRAs, many of which, a quick search reveals, are related to actresses from the "Star Wars" film franchise.

After Mega Space implemented a paywall, users turned to Reddit to voice complaints. Some argued that the paywall was designed to prevent the distribution of child pornography (CP). One user directed others to a paywall-free website called Dezgo, where you can create a new image of any celebrity or search for ones already created by users. These galleries include thousands of images, and some of them reveal personal obsessions (anime, school uniforms, bondage, Emma Stone etc.).

Meanwhile, others engaged in an inevitable discussion. "Doesn't CP, by definition, have to involve a real child?" wrote one anonymous user. "Don't get me wrong, it's gross and indefensible, but in my opinion, I'd prefer there be computer-generated images to satisfy the... *shudders*... needs of these people rather than an actual child being harmed." Another wrote, "'Child' is a vague term, especially when discussing fake people in fake pictures, and even more so when it's heavily stylized, like an anime drawing."

"Although it's a text generator, and there's no actual child involved," says Sperber, "children are still harmed because we know that the more you feed these fantasies, the more the perversion grows. The only way to address it is to avoid it. The approach today is to ban it, even if there doesn't appear to be a real child on the other end because it can lead to dangerous behavior."

Lack of consent

In the world of deepfakes, the danger is clear and immediate, with many victims, especially women and children, being involuntarily involved in pornography through AI. This practice is violent, exploitative, harmful, and often illegal. Pornography websites and even search engines can help remove such illegal content from their platforms, even if that is not their primary focus. They are often obligated to do so by law, and if criminal penalties don't deter them, the threat of civil lawsuits will. But what about artificial intelligence?

The issue is more complex. Various law enforcement authorities scan the internet searching for victims of the sex industry and human trafficking. However, it is now harder to determine whether an image shows a real person or a synthetic creation, and whether a synthetic creation is partially based on a real individual. Where is the line when it comes to harming individuals, particularly in cases where an AI creation resembles a real person?

"There is certainly potential for harm here, even in cases where the creation is considered art or something innovative that blends existing elements with imaginary details generated by technology or algorithms," says Professor Almog. "Even when it is claimed that it is related to freedom of expression, it is harmful. It's common to argue that interference with such types of creation reflects an inability to connect with new technology and moral righteousness. However, if one examines the deeper dimensions of the phenomenon, there is something very old, traditional, about categorizing male preferences as widespread and non-harmful. And these new patterns connect with pre-existing ones, concerning how men in the heterosexual sphere consume sex and pornography. These patterns, deeply rooted in societal consciousness, have been ignored or overlooked in the fight for gender equality. New technology only perpetuates them further."

First published: 09:16, 11.09.23