"AI as an Iron Man suit" : The double-edged weapon of cybersecurity

CTech spoke to five prominent cyber experts about how to prepare for 2026 - and what we’re already doing wrong to protect ourselves.

“Think of AI as an Iron Man suit; it enhances our strength, intelligence, and speed. But that same suit is now available to both defenders and attackers. Cybersecurity is entering a completely new era, and it’s a massive shift,” said Shay Michel, Managing Partner at Merlin Ventures.

As artificial intelligence migrates from novelty to infrastructure, the rules of cyber conflict are being rewritten. Attack tooling has become machine-augmented, defenders are racing to bind new modeled workflows into existing operations, and organizations that treat AI as a checkbox (rather than an attack surface) are being left exposed.

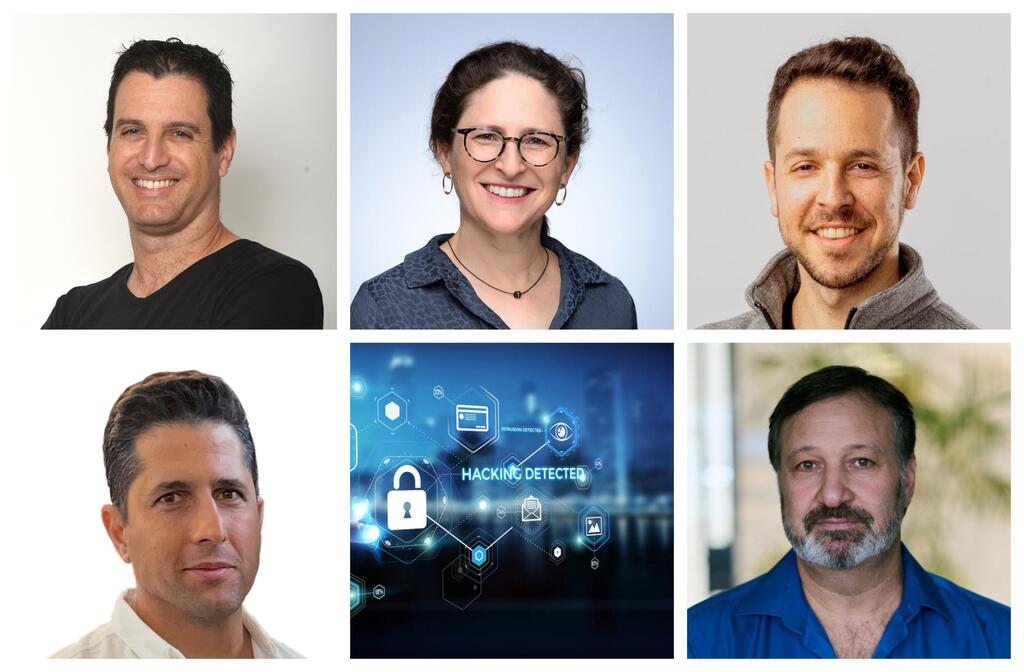

Nadav Avital, Aliza Israel, Hed Kovetz, Shay Michel, Nir Bar-Yosef

(Photo: MazeBolt, Micha Loubaton, Imperva, Jonathan Michal, Yaniv Elisha, Shutterstock)

In Israel, which has already seen record numbers of cyber attacks, these considerations are more relevant than ever.

Exposure now spans everything from smarter DDoS attacks to credential-based intrusions, powered by black-box AI tools and a growing cybercrime economy built on plug-and-play components.

In interviews with Aliza Israel, Senior Cyber Evangelist, MazeBolt; Hed Kovetz, CEO and Co-Founder, Silverfort; Nadav Avital, Head of Security Research at Imperva; Nir Bar-Yosef, Head of Government Cybersecurity Unit, Israel National Digital Agency; and Michel, one thing became clear: In 2025, the weakest assumption an organization can make is that protection equals readiness.

The illusion of DDoS safety

“One of the most serious blind spots today is the illusion of safety around DDoS attacks for organizations that demand business continuity,” says Aliza Israel, senior cyber evangelist at MazeBolt. She warned that while many firms deploy sophisticated mitigation tools, those tools are effective only if continuously validated. Networks evolve; misconfiguration creeps in; and point-in-time testing can miss what appears the next day. MazeBolt’s own research suggests sizeable exposure and, she argues, proves a need for nondisruptive, continuous testing on live environments to close the gap.

Her warning matches market data: “DDoS attacks surged almost a third (30%) in the first half of 2024 compared to the same period in the previous year. Moreover, DDoS attacks on critical infrastructure increased by 55% in the last four years.” MazeBolt’s trend reports underscore that volume is only one dimension, and visibility and validation are others.

Put simply, a firewall in place is not a guarantee. If protective rules or routing policies drift, or if mitigation is misconfigured, the business can still go offline, and discovering that only after an outage is exactly the false confidence she cautions against.
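As a rough illustration of what catching drift can look like, here is a minimal Python sketch that compares a last-validated baseline against the live configuration. It is not MazeBolt's method, which the article does not detail, and it covers only one narrow slice of continuous validation: spotting changed settings, not testing whether mitigation actually holds under attack. The function name `find_drift` and the setting names are hypothetical.

```python
from typing import Dict, List


def find_drift(baseline: Dict[str, str], live: Dict[str, str]) -> List[str]:
    """Report every setting whose live value differs from the validated baseline."""
    findings = []
    # Walk the union of keys so added and removed settings are both reported.
    for key in sorted(baseline.keys() | live.keys()):
        expected = baseline.get(key)
        actual = live.get(key)
        if expected != actual:
            findings.append(f"{key}: expected {expected!r}, found {actual!r}")
    return findings


# Hypothetical settings, for illustration only.
baseline = {"rate_limit": "1000/s", "geo_block": "on", "syn_cookies": "on"}
live = {"rate_limit": "1000/s", "geo_block": "off", "debug_bypass": "on"}

for finding in find_drift(baseline, live):
    print(finding)
```

Run on a schedule rather than once a year, a check like this at least narrows the window in which a silent change goes unnoticed.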

Identity: the new front line

“One of the biggest blind spots today is identity,” explained Hed Kovetz, Silverfort’s co-founder and CEO. “Organizations have spent years hardening their networks and endpoints, but the identity layer (the way access is granted and authenticated) remains fragmented and poorly monitored.” Kovetz described identity as fragmented across siloed systems: a landscape where attackers use legitimate credentials to move laterally instead of trying to ‘break in.’ “Credentials and access paths have become the primary attack surface,” he said.

That view is increasingly the orthodox one in enterprise security: identity is not a single control, but an ecosystem of accounts, service principals, APIs, and agents. When an identity (either human or machine) is over-privileged, attackers need only hijack that relationship to access wide swathes of value. Kovetz’s point is stark: defending identities now requires unified visibility, continuous verification, and enforcement that extends to legacy and SaaS systems alike.

The AI stack as an attack surface

Nadav Avital, senior director of threat research at Imperva, said: “The largest blind spot sits inside the AI stack itself.” His concern isn’t just that organizations deploy models, but that they do so without the same threat modeling and access controls they apply to code or APIs. Over-permissive agents, poor I/O validation and unmonitored east-west agent traffic, he warned, let sensitive data and actions move around without triggering traditional controls.

Avital argues the remedy is twofold: demand minimum transparency from vendors (model cards, SBOMs/MBOMs, and jailbreak and PII tests) and take full control of the I/O by brokering every call through policy gates and canary tests. When models have live hooks into production systems, he says, those hooks must be treated as privileged conduits: enforce policy, monitor egress, and retain kill-switches.

Semi-autonomy today, hybrid attacks tomorrow

A recurring theme across the interviews was that the bar for full autonomy remains high, but hybrid attacks (AI at scale guided by human intent) are already the default. “We’re already in the semi-autonomous phase,” Avital observed. AI can orchestrate reconnaissance, craft phishing text, and even chain vulnerabilities into exploitation pathways; humans still often provide strategic improvisation and judgment.

Nir Bar-Yosef, head of Israel’s Government Cybersecurity Unit, affirmed that automation is embedded in defensive tooling (SOAR playbooks, automated response), but insisted human expertise remains central to threat research and nuanced decision-making. “The difference is that it will become much more efficient as AI technology continues to advance,” he said.

That hybrid reality is sobering because it combines two accelerants: accessible tooling that multiplies attacker reach, and human attackers who direct and refine the machine’s energy. This year, Europol and other agencies have flagged this trend: organized crime is adopting AI-enabled modus operandi for scale and deception. The result is not a single super-AI attack, but thousands of adaptive, targeted intrusions launched by semi-autonomous fleets.

Black boxes, trust, and vendor risk

A second practical problem, and one the interviewees treated as urgent, is the “black box” vendor ecosystem. Shay Michel of Merlin Ventures elaborated: “You can’t fully trust what you can’t see. We’re shifting from a world where we used to inspect the code and the product to one where we must understand how the model and agent actually behave. That’s a major mindset change, and it will take time.”

Israel offered a similar observation: “You can’t protect what you can’t validate. Black-box security tools often obscure whether they’re actually doing the job. That’s why continuous vulnerability testing is essential across the entire network attack surface,” she said.

Kovetz echoed that position: assume third-party AI tools are untrusted until proven otherwise; enforce identity-based access; and instrument every API call. “At a minimum, companies should demand clarity around data handling, model training sources, and decision logic.”

Avital suggested a concrete architecture: broker all model traffic through an I/O control plane that enforces filters, monitors data egress, and supports policy mediation. This broker model lets firms avoid blind trust while still leveraging third-party capabilities. The alternative is stark: if you let an AI model read or act on sensitive data without governance, you have effectively outsourced your security perimeter.
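To make the broker idea concrete, here is a minimal Python sketch of a single choke point for model traffic. It is an illustration under stated assumptions, not Avital's or Imperva's design: the `Broker` class, the `echo_model` stand-in, and the email-only redaction rule are all hypothetical, and a real control plane would enforce far richer policy.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, List

# Assumption for illustration: an email address is the only "sensitive" pattern.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


@dataclass
class Broker:
    model: Callable[[str], str]   # stand-in for any third-party model call
    kill_switch: bool = False     # operators flip this to halt all model traffic
    audit_log: List[str] = field(default_factory=list)

    def call(self, prompt: str) -> str:
        if self.kill_switch:
            self.audit_log.append("blocked: kill switch engaged")
            raise RuntimeError("model access disabled")
        # Input gate: redact sensitive data before it leaves the organization.
        safe_prompt = EMAIL.sub("[REDACTED]", prompt)
        reply = self.model(safe_prompt)
        # Egress gate: apply the same filter to whatever the model returns.
        safe_reply = EMAIL.sub("[REDACTED]", reply)
        self.audit_log.append(
            f"allowed: {len(safe_prompt)} chars in, {len(safe_reply)} chars out"
        )
        return safe_reply


def echo_model(prompt: str) -> str:
    """Hypothetical model that simply echoes its input."""
    return f"echo: {prompt}"


broker = Broker(model=echo_model)
print(broker.call("contact alice@example.com"))
```

Because every call passes through `Broker.call`, filtering, logging, and the kill-switch live in one place instead of being scattered across each integration.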

A final warning

Aliza Israel’s closing point is blunt and operational: “Don’t rely on unvalidated protections.” Passing an audit or having a best-of-breed stack is not the same as being resilient. Every minute of downtime is measurable damage: financial, reputational and regulatory. In an environment where attacks are adaptive, automated and distributed, readiness is continuous work.

If there’s a single throughline from the interviews, it is this: defending the AI era requires a posture that blends machine speed with human governance. Treat AI as infrastructure that can be instrumented, tested, and switched off. Treat identity as the choke point through which control must flow. And treat vendor AI as conditional trust: auditable, mediated, and reversible. “The most effective action will be by investing in awareness and training on the risks of using AI technology, and give tools to the end user to use it properly and securely,” says Bar-Yosef.

While there is no simple fix, there is a clear guide: validate continuously, instrument the AI stack, unify identity, and re-anchor responsibility with human operators trained for a different kind of fight. Because when your protections are only as good as your last test, the future belongs to those who test faster and govern smarter.

“Identity is the new control point,” concluded Kovetz. “It’s the only layer capable of enforcing controls, detecting, and stopping AI-driven threats in real time. That’s where the future of defense will be decided.”