Google TPU momentum spurs talk of a post-Nvidia future

A sudden improvement in Gemini performance fuels speculation of a long-awaited shift in AI infrastructure.

“Holy shit. I’ve used ChatGPT every day for 3 years. Just spent 2 hours on Gemini 3. I’m not going back.” This post on X (formerly Twitter) was written on Monday by Marc Benioff, the founder and CEO of software giant Salesforce, who has more than a million followers. Benioff frequently comments on and marvels at the artificial intelligence revolution on his X account, even though its rapid development also poses a direct threat to the company he built.

The post was not the only one praising the new version of Gemini that Google released last week. Google’s stock jumped 6% on Monday, and the positive momentum continued on Tuesday. The stock is now up 70% since the beginning of 2025. The rally nearly pushed Google to a $4 trillion valuation, a milestone previously reached only by Nvidia, Microsoft, and Apple, and the company appears poised to remain in that territory.

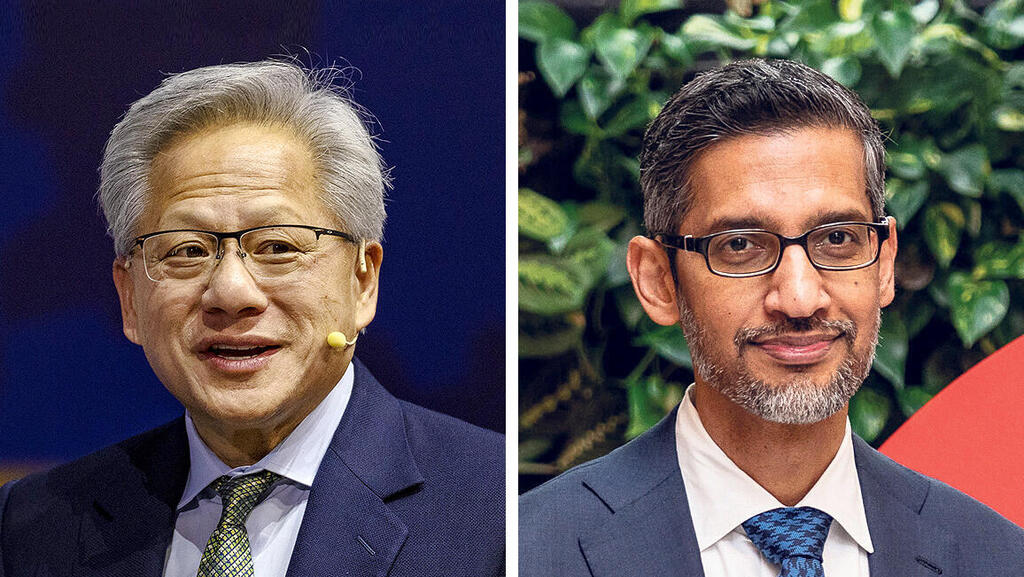

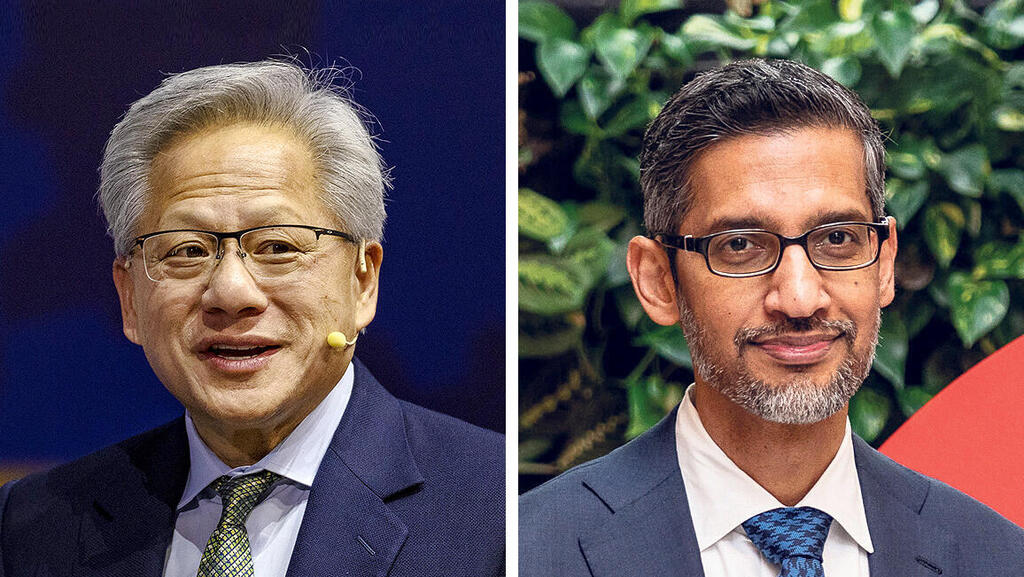

Nvidia CEO Jensen Huang, Google CEO Sundar Pichai

(Photos: Damian Lemanski/Bloomberg, Ezra Acayan/Getty Images)

A Shift in the AI Conversation

Google’s surge is driven by something far deeper than a successful new chatbot. Unlike its competitors, Gemini was trained and runs on chips developed by Google itself rather than on Nvidia’s chips. Google’s chip, called a Tensor Processing Unit (TPU), operates differently from Nvidia’s Graphics Processing Unit (GPU), which has dominated the AI market.

Until recently, Gemini faced repeated failures, from overly rigid political correctness to generating historically inaccurate images, including depicting a Black woman as a former pope and showing Nazi soldiers in various racial identities. Google ultimately suspended Gemini’s ability to generate images of people, and CEO Sundar Pichai issued a public apology. Suddenly, however, users are reporting that Gemini is more accurate and coherent than OpenAI’s ChatGPT and performs better on complex tasks.

But even Gemini is not the main story. What drove the sharp rise in Google’s stock, and replaced talk of an “AI bubble,” is the possibility that a crack has opened in Nvidia’s monopoly. Nvidia is not going anywhere and will continue to be the world’s most powerful tech company, or at worst one of them. But the surprising capabilities demonstrated by Google may signal a genuine path to reducing dependence on Nvidia, or, as it is known in the chip world, lowering the “Nvidia tax.” That tax, expressed in extremely high chip prices and total dependence on Nvidia’s delivery timelines, is paid today by every company building or using AI systems. In other words: nearly every company on Earth.

Although Nvidia founder and CEO Jensen Huang said last week that customer prioritization is based on purely business considerations, specifically, on whether buyers can use the chips immediately rather than stockpiling them, there have long been reports that Silicon Valley titans like Oracle’s Larry Ellison and Elon Musk have pressed Huang for preferential treatment.

For Wall Street investors, jittery from cycles of extreme optimism and extreme pessimism about AI, even a hint of competition for Nvidia offers a sigh of relief. A more competitive landscape could ease the massive spending commitments that companies from Meta to Amazon and Microsoft are making to keep pace with the AI revolution.

Fuel was added to this fire on Tuesday when reports emerged in the U.S. that Mark Zuckerberg’s Meta is considering switching to Google processors in its server farms starting in 2027. That possibility made the dream of an alternative to Nvidia’s supply chain feel far more real, and sent thousands of people who had just learned what a GPU is scrambling to ask ChatGPT or Gemini: So what exactly is a TPU?

Google unveiled the first generation of TPU chips in 2016, after running them in its own data centers since 2015, and opened them to cloud customers in 2018. The chips were designed initially for internal infrastructure tasks such as storage and Google’s own search engine. As AI technologies advanced, Google recognized the chip’s natural evolution toward training large language models (LLMs). This explains the early signs of an advantage now emerging over Nvidia’s GPUs, which were originally built for demanding graphics in video games rather than mathematical optimization at AI scale. GPUs eventually proved suitable for AI and became a general-purpose solution. TPUs, however, were engineered specifically for AI.

As a result, Google’s processors are more efficient, capable of performing more operations per second while consuming less energy. In a world where Nvidia chips are scarce and energy infrastructure is becoming the new bottleneck, to the point where the U.S. is planning nuclear power plants solely for AI data centers, TPU efficiency is a meaningful advantage. This matters not only for training models but increasingly for inference.

Fears that Nvidia could lose control of the AI chip market sent its stock down, leaving its valuation at around $4.3 trillion, not far from Google’s. After Nvidia’s fall and Google’s rise, the gap between their forward earnings multiples has narrowed, making Nvidia appear far cheaper than it did a week earlier.

Breaking Free From Nvidia

Google’s internal chip needs remain enormous, so it is not at all certain that it will rush to sell TPUs broadly. The competitive advantage they give Google, reducing dependence on Nvidia, is critical, especially in the cloud computing battle where Google still trails Amazon and Microsoft.

A new domino effect is also emerging. While attention focused on the spike in Google’s stock and the drop in Nvidia’s, other companies quietly posted notable movements. The biggest winner was Broadcom, whose stock jumped, pushing its valuation toward $2 trillion.

Broadcom, Google’s development partner, designs Google’s chips. If Meta eventually buys TPU chips, it will also be paying Broadcom. The company develops chips for other clients as well, though strict confidentiality is standard in these relationships. Another rising player is Marvell, a smaller competitor in the market for customized chips like Google’s TPU. Like Mellanox, now part of Nvidia and central to its AI offerings, Marvell also provides high-speed connectivity solutions for server farms.

Chip manufacturers are also poised to benefit from Google’s entry into the high-end AI chip race. These include East Asian giants TSMC and Samsung, as well as U.S.-based Micron, all of which stand to profit regardless of whether they manufacture chips for Google or Nvidia.