Opinion

Responsible AI-driven healthcare: A goal within reach

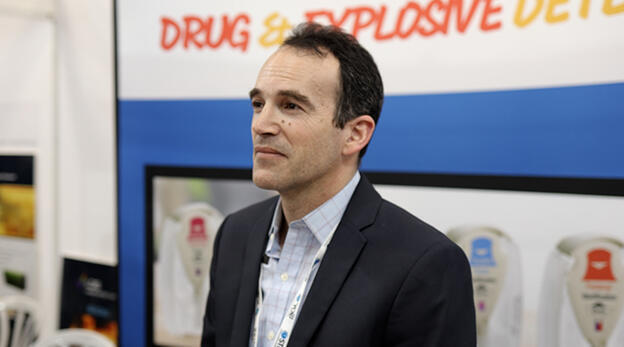

"We are fortunate to be at a point where cutting-edge science can be applied to one of the most fundamental human goals: delivering better care to every patient at the right time," writes Prof. Ran Balicer, Chief Innovation Officer and Deputy D.G. at Clalit Health Services and Chair of the National HealthTech AI Summit.

Chat tools based on large language models, such as ChatGPT, have become part of everyday professional life in many domains, even though they are notoriously unreliable when it comes to accuracy. Over the past year, this tension has been highlighted repeatedly, for instance in cases in which lawyers were fined after submitting briefs that contained fabricated citations generated by AI.

In healthcare, the margin for error is far smaller. The concern is not limited to so-called hallucinations; equally troubling are issues of bias and value misalignment, especially when AI systems learn from existing patterns of care that are themselves flawed. In cardiovascular medicine, for example, women continue to be misdiagnosed at higher rates than men. Systems trained on such data risk perpetuating these disparities and, in some cases, deepening them.

These worries are justified, and they are compounded by the growing difficulty regulators face in keeping up with rapid technological change. In discussions held within the United Nations High-Level Advisory Body on Artificial Intelligence, a recurring theme emerged: there are real dangers in adopting AI carelessly, but there are also risks in failing to adopt it at all. The latter are often less visible, yet no less consequential.

Healthcare offers a stark illustration. According to a recent OECD working paper, roughly 15 percent of diagnoses across developed countries are incorrect, delayed, or inaccurate. The economic impact of these errors is estimated at about 17.5 percent of healthcare spending, or close to 1.8 percent of GDP. These figures are likely conservative, and the pressures driving them (aging populations, the rising complexity of care, and workforce shortages) are only intensifying.

Yet against this grim backdrop, the risk-driven public discussion seldom addresses the “status quo risk”: the risk of not introducing better tools. It is rarely measured or openly discussed, even though it carries very real costs for patients and health systems, costs that AI tools can help alleviate. Such tools can improve quality, extend access, and support earlier and more precise interventions, including for populations that are traditionally underserved.

Public perception presents an additional challenge. Studies consistently show that people are far less forgiving of mistakes made by machines than of similar errors made by humans. Human error is often accepted as unavoidable, while algorithmic error is seen as unacceptable. This imbalance helps explain resistance to autonomous vehicles in many countries, despite evidence that their overall safety may exceed that of human drivers. Healthcare is subject to the same dynamics.

One of Israel’s distinguishing strengths in artificial intelligence, as in cybersecurity, lies in practical implementation rather than abstract capability. In healthcare, Clalit Health Services has built this strength over time. Fifteen years of focused investment in research, development, and deployment have allowed Clalit to move well ahead of many large and well-resourced health systems that are only now beginning to introduce AI into routine care.

From the beginning, the emphasis was on machine learning solutions that avoid the risks associated with generative hallucinations and that address bias explicitly rather than retrospectively. Today, across Clalit and its 5 million members, these systems generate AI-driven recommendations that support proactive, predictive, and preventive care. Physicians review them daily, and each month more than 100,000 recommendations are adopted by GPs and translated into concrete clinical action. Studies from Clalit published in leading scientific journals have shown improvements of up to 100-fold (!) in certain diagnostic and treatment capabilities. Very few organizations can point to that level of sustained, real-world impact.

As transformer-based AI tools mature, it is becoming clear that we will not view them simply as another set of tools. In clinical practice, AI has already progressed from a “whisperer” quietly offering advice to providers to an active “navigator” that helps prioritize cases and direct patients to specific streams of care. In the near future, AI-driven agents will begin to function more as a “frontline caregiver” for simple, low-risk scenarios. This shift requires a change in mindset: AI must be treated more like a colleague or trainee, one that requires supervision, accountability, and clear boundaries.

This is why regulation, when done thoughtfully, enables progress rather than blocking it. Clalit was the first healthcare organization in Israel to establish a comprehensive internal regulatory framework for AI. Known as OPTICA (Organizational PerspecTIve Checklist for AI solutions adoption), the framework has been published in leading academic journals and cited by international bodies such as the OECD and the World Health Organization. It is now being examined in several countries as a possible foundation for broader AI governance.

At the same time, Israel faces real gaps that must be addressed to maintain its leadership in AI and healthcare innovation. Shortcomings in training, data infrastructure, computing and energy capacity, and investment in applied pilots were highlighted this year by the Nagel Committee. The establishment of a National Artificial Intelligence Authority creates an important opportunity to tackle these issues in a coordinated way.

Many of these issues were discussed in depth at the National HealthTech AI Summit, held on December 29 in Tel Aviv, the largest gathering ever held in Israel dedicated to AI in healthcare. More than 1,000 clinicians, health system leaders, technology executives, and internationally recognized researchers took part, staying through a full day of presentations and discussions. For us at Clalit, the atmosphere felt less like a conventional conference and more like reaching a real summit: a moment to take stock after fifteen years of steady progress and to look ahead.

We are fortunate to be at a point where cutting-edge science can be applied to one of the most fundamental human goals: delivering better care to every patient at the right time. With responsible design, thoughtful regulation, and real-world experience, AI-driven healthcare is no longer a distant promise. It is already taking shape.

The author is Chief Innovation Officer and Deputy D.G. at Clalit Health Services and Chair of the National HealthTech AI Summit.