Nvidia’s $20 billion Groq deal: Talent and technology over traditional acquisition

The transaction underscores the urgency of AI competition and the need to bypass regulatory delays.

The intensity of the AI race, and the lengths companies will go to for even a slight advantage, were made clear on Wednesday with the announcement of a deal between AI chip giant Nvidia and chip startup Groq (not to be confused with Elon Musk's Grok).

Just three months ago, Groq raised $750 million at a $6.9 billion valuation. On Wednesday, Nvidia agreed to pay a reported $20 billion in a deal that includes non-exclusive access to Groq’s technology and the transfer of senior employees, led by founder and CEO Jonathan Ross, to Nvidia. That is $20 billion not to acquire the company, not to acquire its intellectual property, and not even for an exclusive license. It buys only permission to use the technology and recruit its people. The sum is more accurately described as an extraordinarily generous signing bonus.

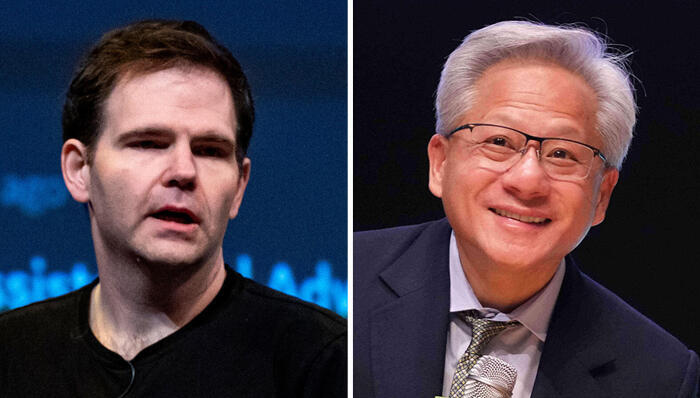

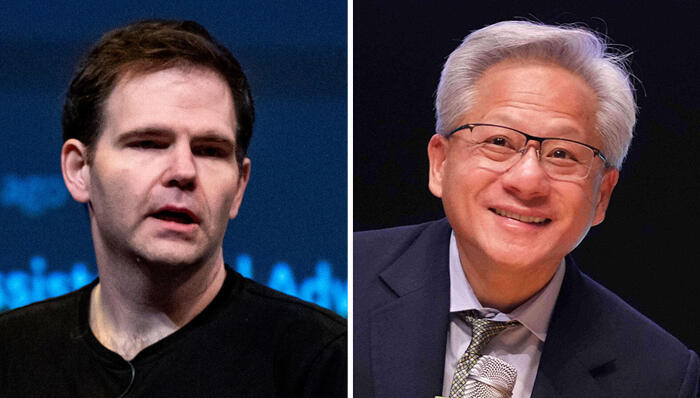

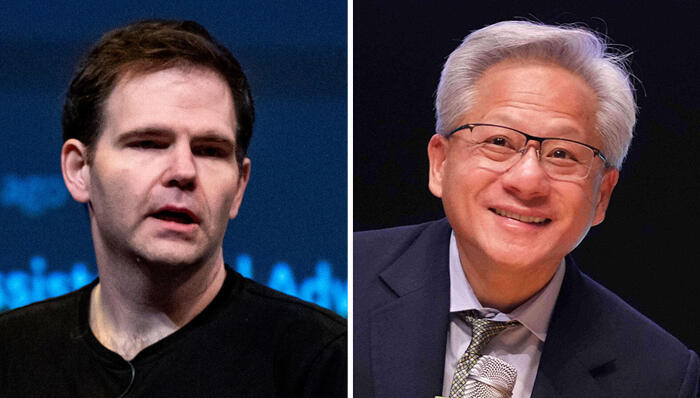

Jensen Huang (right) and Jonathan Ross.

(Photos: AP/Lee Jin-man, David Paul Morris/Bloomberg)

Why is Nvidia doing this? The AI race is so intense, and the fight for an advantage so critical, that companies cannot afford the time-consuming regulatory processes required for major acquisitions. This approach gives Nvidia exactly what it wants: access to the technology and the engineers who know how to take it to the next level.

Groq was founded in 2016 by a team of engineers from Google, led by Ross, one of the developers of Google’s Tensor Processing Unit (TPU) chips, a key asset in the tech giant’s Gemini project to compete with OpenAI’s ChatGPT. Groq’s AI chips are designed for the inference phase of AI models, the stage where users ask ChatGPT or Gemini to answer questions, analyze information, or generate images and videos. In some ways, these chips complement Nvidia’s AI chips, which are primarily used for model training. Inference chips are generally cheaper, more energy-efficient, and faster to deploy.

Nvidia’s success over the past three years, which has propelled it to a market value of $4.6 trillion, the highest in history for a public company, has relied heavily on demand for its training chips. But estimates suggest the market focus will soon shift toward inference chips, driven in part by DeepSeek’s breakthrough a year ago, which enables significant resource savings in model training, and by the growing use of AI models and AI-powered applications across industries.

For Nvidia, a key part of its strategy has been offering customers an integrated solution: not just AI chips, but ultra-fast communication chips (developed in its Israeli R&D center), software, and a complete ecosystem. Adding Groq’s inference technology allows Nvidia to expand this ecosystem further.

In a LinkedIn post, Ross said: “Today Groq entered into a non-exclusive licensing agreement with Nvidia for Groq’s inference technology. Along with other members of the Groq team, I’ll be joining Nvidia to help integrate the licensed technology. GroqCloud will continue to operate without interruption.”

Nvidia CEO Jensen Huang told employees that the company plans “to integrate Groq’s low-latency processors into the NVIDIA AI factory architecture, extending the platform to serve an even broader range of AI inference and real-time workloads.”

Huang added: “While we are adding talented employees to our ranks and licensing Groq’s IP, we are not acquiring Groq as a company.”

Groq, for its part, will continue operating independently, with Simon Edwards taking over Ross’s role.

Nvidia, which has long dominated AI chips, faces emerging competition. Google, Amazon, OpenAI, and Meta are developing their own chips due to limited Nvidia supply, high prices, and the desire for supply chain control. If these efforts succeed, Nvidia’s market share could shrink. The Groq deal expands Nvidia’s offerings and speeds its market response, avoiding the lengthy regulatory approvals required for traditional acquisitions.

Nvidia is not alone in pursuing this approach. In June, Meta invested $14 billion in Scale AI, gaining access to its technology and employees. About a year and a half ago, Google made a similar deal with Character.AI, and a few months earlier, Microsoft did the same with Inflection AI. These arrangements let companies access key technology and talent without the delays of conventional mergers.

However, there are downsides. After Meta’s investment in Scale AI, two of the startup’s largest customers, Google and OpenAI, stopped working with it. Demand for the company’s services surged elsewhere, but it also laid off 200 employees (14% of its workforce) and terminated contracts with 500 external suppliers. Microsoft’s and Google’s deals with Inflection AI and Character.AI, respectively, similarly left those startups as “hollow shells.”

Still, the urgency and scale of the AI market mean that tech giants, and investors, are unlikely to be deterred. The financial incentives are too large, and the race is too fast-moving.